Albert Barqué-Duran

Department of Psychology

CITY UNIVERSITY LONDON

A runaway trolley is approaching a fork in the tracks. If the trolley is allowed to run on its current track, a work crew of five will be killed. If the driver steers the trolley down the other branch, a lone worker will be killed. If you were driving this trolley, what would you do? What would a computer or robot driving this trolley do? Autonomous systems are coming whether people like it or not. Will they be ethical? Will they be good? And what do we mean by “good”?

Many agree that artificial moral agents are necessary and inevitable. Others say that the idea of artificial moral agents only intensifies their unease with cutting-edge technology. There is something paradoxical in the idea that one could relieve the anxiety created by sophisticated technology with even more sophisticated technology. A tension exists between our fascination with technology and the anxiety it provokes. This anxiety can be explained by (1) all the usual futurist fears about technology on a trajectory beyond human control and (2) worries about what this technology might reveal about human beings themselves. The question is not what technology will be like in the future but what we will be like: what are we becoming as we forge increasingly intimate relationships with our machines? What will be the human consequences of attempting to mechanize moral decision-making?

Nowadays, when computer systems select from among different courses of action, they engage in a kind of decision-making. The ethical dimensions of this decision-making are largely determined by the values engineers build into the systems, either implicitly or explicitly. Until recently, designers did not consider how values were implicitly embedded in the technologies they produced, and we should make them more aware of the ethical dimensions of their work. The goal of artificial morality, however, goes further: beyond emphasizing how designers’ values shape a system’s operational morality, it aims to give the systems themselves the capacity for explicit moral reasoning and decision-making.

A key distinction in moral judgment is that between deontological and consequentialist decisions. Recent dual-process accounts of moral judgment contrast deontological judgments, which are generally driven by automatic, unreflective, intuitive responses prompted by the emotional content of a given dilemma, with consequentialist judgments, which result from unemotional, controlled, rational reflection driven by conscious evaluation of the potential outcomes. Are these ethical principles, patterns, theories, and frameworks useful in guiding the design of computational systems capable of acting with some degree of autonomy?

The task of enhancing the moral capabilities of autonomous software agents will force all sorts of scientists and engineers to break moral decision-making down into its component parts. Is making a moral robot only a matter of finding the right set of constraints and the right algorithms for resolving conflicts between them? A top-down approach, for example, takes an ethical theory, say utilitarianism, analyzes the informational and procedural requirements for implementing that theory in a computer system, and applies that analysis to the design of subsystems and the way they relate to each other. In bottom-up approaches to machine morality, by contrast, the emphasis is on creating an environment in which an agent explores courses of action, learns, and is rewarded for behavior that is morally praiseworthy. Unlike top-down ethical theories, which define what is and is not moral, bottom-up approaches require any ethical principles to be discovered or constructed.
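To make the top-down/bottom-up contrast concrete, here is a toy sketch in Python built on the trolley case. Everything in it is an illustrative assumption: the hypothetical `Action` type, treating the number of deaths as the only morally relevant feature, and an epsilon-greedy value learner standing in for "bottom-up" learning. The `reward` function is a hypothetical stand-in for whatever praise or blame signal the environment provides. It is not an implementation of any published moral-machine architecture.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Action:
    name: str
    deaths: int  # assumed outcome: people killed if this action is taken


def top_down_choice(actions: List[Action]) -> Action:
    """Top-down: a fixed theory (crude utilitarianism, 'minimize deaths')
    is specified in advance and simply applied to the options."""
    return min(actions, key=lambda a: a.deaths)


def bottom_up_learn(
    actions: List[Action],
    reward: Callable[[Action], float],  # hypothetical praise/blame signal
    episodes: int = 500,
    epsilon: float = 0.1,  # probability of exploring a random action
    lr: float = 0.1,  # learning rate for value updates
    seed: int = 0,
) -> Dict[str, float]:
    """Bottom-up: no theory is given; the agent tries actions and learns
    a value estimate for each from the feedback it receives."""
    rng = random.Random(seed)
    values = {a.name: 0.0 for a in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            chosen = rng.choice(actions)  # explore
        else:
            chosen = max(actions, key=lambda a: values[a.name])  # exploit
        # Move the estimate one step toward the observed reward.
        values[chosen.name] += lr * (reward(chosen) - values[chosen.name])
    return values


if __name__ == "__main__":
    options = [Action("stay", deaths=5), Action("switch", deaths=1)]
    print(top_down_choice(options).name)  # switch
    learned = bottom_up_learn(options, reward=lambda a: -a.deaths)
    print(max(learned, key=learned.get))  # switch
```

In this toy both agents end up preferring to switch tracks, but for different reasons: the top-down agent applies a rule it was handed, while the bottom-up agent's preference exists only as learned estimates, which would change if the feedback signal changed.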

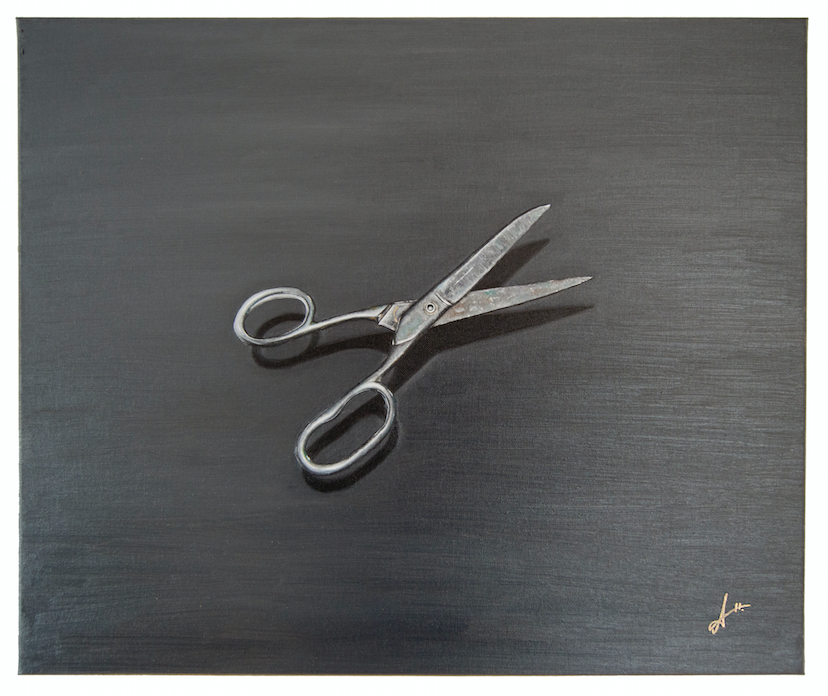

Some claim that utilitarianism is not a particularly useful or practical theory, because calculating the consequences of every possible action would never end. One reply is that calculations should be halted at precisely the point where continuing to calculate rather than act has a negative effect on aggregate utility. But how do you know whether a computation is worth doing without actually doing the computation itself? How do we, humans, do it? We generally practice what Herbert Simon, a founder of AI and the 1978 Nobel laureate in economics, called “bounded rationality”: the idea that when individuals make decisions, their rationality is limited by the available information, the tractability of the decision problem, the cognitive limitations of their minds, and the time available to make the decision. In this view, decision-makers act as satisficers, seeking a satisfactory solution rather than an optimal one. Simon used the metaphor of a pair of scissors, where one blade represents the “cognitive limitations” of actual humans and the other the “structures of the environment”, illustrating how minds compensate for limited resources by exploiting known structural regularities in the environment. The question is whether a computational system restricted in the same way, weighing the same information as a human, would be an adequate moral agent. Choosing which theory to implement is no easier: just as utilitarians do not agree on how to measure utility, deontologists do not agree on which list of duties applies, and contemporary virtue ethicists do not agree on a standard list of virtues that any moral agent should exemplify.
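A minimal sketch of the difference between optimizing and satisficing, in Python. It is a toy illustration, not Simon's own formalism: the aspiration level, the evaluation budget, and the `x % 97` utility are hypothetical, chosen only to show how a satisficer trades solution quality for far fewer evaluations.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

T = TypeVar("T")


def optimize(options: Iterable[T], utility: Callable[[T], float]) -> Tuple[Optional[T], int]:
    """Unbounded maximizer: evaluate every option and return the best one
    together with how many evaluations it cost."""
    best: Optional[T] = None
    best_u = float("-inf")
    evaluations = 0
    for option in options:
        evaluations += 1
        u = utility(option)
        if u > best_u:
            best, best_u = option, u
    return best, evaluations


def satisfice(
    options: Iterable[T],
    utility: Callable[[T], float],
    aspiration: float,  # assumed "good enough" threshold
    budget: int,  # assumed cap on evaluations (time/attention available)
) -> Tuple[Optional[T], int]:
    """Satisficer: accept the first option that meets the aspiration level,
    giving up (returning None) once the evaluation budget is spent."""
    evaluations = 0
    for option in options:
        if evaluations >= budget:
            break
        evaluations += 1
        if utility(option) >= aspiration:
            return option, evaluations
    return None, evaluations


if __name__ == "__main__":
    def score(x: int) -> float:
        return x % 97  # hypothetical utility over 1,000 options

    print(optimize(range(1000), score))  # (96, 1000)
    print(satisfice(range(1000), score, 90, 200))  # (90, 91)
    print(satisfice(range(1000), score, 100, 50))  # (None, 50)
```

Here the optimizer must score all 1,000 options to find the best one (utility 96), while the satisficer stops after 91 evaluations with an option worth 90; with an unreachable aspiration it simply gives up when its budget runs out. Setting the aspiration level and budget is itself a judgment made without doing the full computation, which is exactly the regress the question above points to.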

Human-computer interactions are likely to evolve dynamically, and computerized agents will need to accommodate these changes. The demands placed on systems that combine multiple approaches illustrate the relationship between increasing autonomy and the need for greater sensitivity to the morally relevant features of different environments. These challenges call for further systematic study of the factors and mediators affecting moral choice, which condition the way we perceive and interact with our fast-changing environment and reality. We should focus on the incremental steps, arising from present technologies, that suggest a need for ethical decision-making capabilities, and on how research on human-machine interaction feeds back into our understanding of ourselves as moral agents and of the nature of ethical theory itself.

The particular characteristics of human decision-making are a fundamental aspect of what it means to be human.

References:

Barqué-Duran, A., et al. (2015). Patterns and Evolution of Moral Behavior: Moral Dynamics in Everyday Life. Thinking & Reasoning.

Barqué-Duran, A., et al. (2016). Contemporary Morality: Moral Judgments in Digital Contexts. (Submitted).

Bonnefon, J.-F., et al. (2015). Autonomous Vehicles Need Experimental Ethics: Are We Ready for Utilitarian Cars? arXiv:1510.03346.

Gigerenzer, G. (1991). From Tools to Theories: A Heuristic of Discovery in Cognitive Psychology. Psychological Review.

Greene, J. D., et al. (2001). An fMRI Investigation of Emotional Engagement in Moral Judgment. Science.

Singer, P. (Ed.) (1991). A Companion to Ethics. Oxford, England: Blackwell Reference.

Wallach, W., and Allen, C. (2009). Moral Machines: Teaching Robots Right from Wrong. Oxford, England: Oxford University Press.

First of all, I would like to answer the question presented in the title of this blog. My answer is yes, humanity does want computers making moral decisions. That being said, not everyone trusts machines to make ethical decisions, but I feel that a significant number of people would be okay with this. Given how technology has advanced, especially in recent years, I am confident that computers can be properly programmed and designed so that they can make moral decisions. This blog discusses the decision-making process of computers and notes that these computers would not just make a quick decision; rather, they would have options and be able to explore them in their decision-making process to come up with the right answer, whatever that may be. We all know that computers these days show very intelligent behavior, sometimes even more so than humans. When it comes to computers having high moral standards, I do not think it is far-fetched to think that they are capable of that. In our college textbook, “Ethics in Human Communication,” the author Richard L. Johannesen says that virtues are described variously as learned, acquired, cultivated, reinforced, and capable of modification (Johannesen 2008). When I read this, I thought to myself that computers definitely possess all of these qualities. With that in mind, and as advanced as computers have become, why would they not be capable of making moral decisions? It definitely makes sense to me, and I believe it would take a lot of stress off decision-makers in certain areas, because they would be able to focus elsewhere on important, critical tasks.
