by Joao Fabiano

Moral philosophers often prefer to conceive thought experiments, dilemmas and problem cases involving single individuals who make one-shot decisions with well-defined short-term consequences. Morality is complex enough that such simplifications seem justifiable or even necessary for philosophical reflection. If we are still far from consensus on which moral theory is best or what makes actions right or wrong – or even on whether such questions should be the central problem of moral philosophy – when considering simplified toy scenarios, then introducing group or long-term effects would make matters significantly worse. However, when it comes to actually changing human moral dispositions with the use of technology (i.e., moral enhancement), ignoring the essential fact that morality deals with group behaviour with far-reaching consequences can be extremely risky. Despite those risks, attempting to provide a full account of morality before conducting moral enhancement would be both impractical and arguably risky. We seem to be far away from such an account, yet there are pressing moral failings today, such as our inability to cooperate properly on a large scale, which makes solving present global catastrophic risks, such as global warming or nuclear war, next to impossible. Sitting back and waiting for a complete theory of morality might be riskier than attempting to fix our moral failings using incomplete theories. We must, nevertheless, proceed with caution and an awareness of such incompleteness. Here I will present several severe risks from moral enhancement that arise from focusing on improving individual dispositions while ignoring emergent societal effects, and point to tentative solutions to those risks.
I deem these emergent risks fundamental problems both because they lie at the foundation of the theoretical framework guiding moral enhancement – moral philosophy – and because they seem, for the time being, inescapable; my proposed solution will therefore aim at increasing awareness of such problems rather than directly solving them.

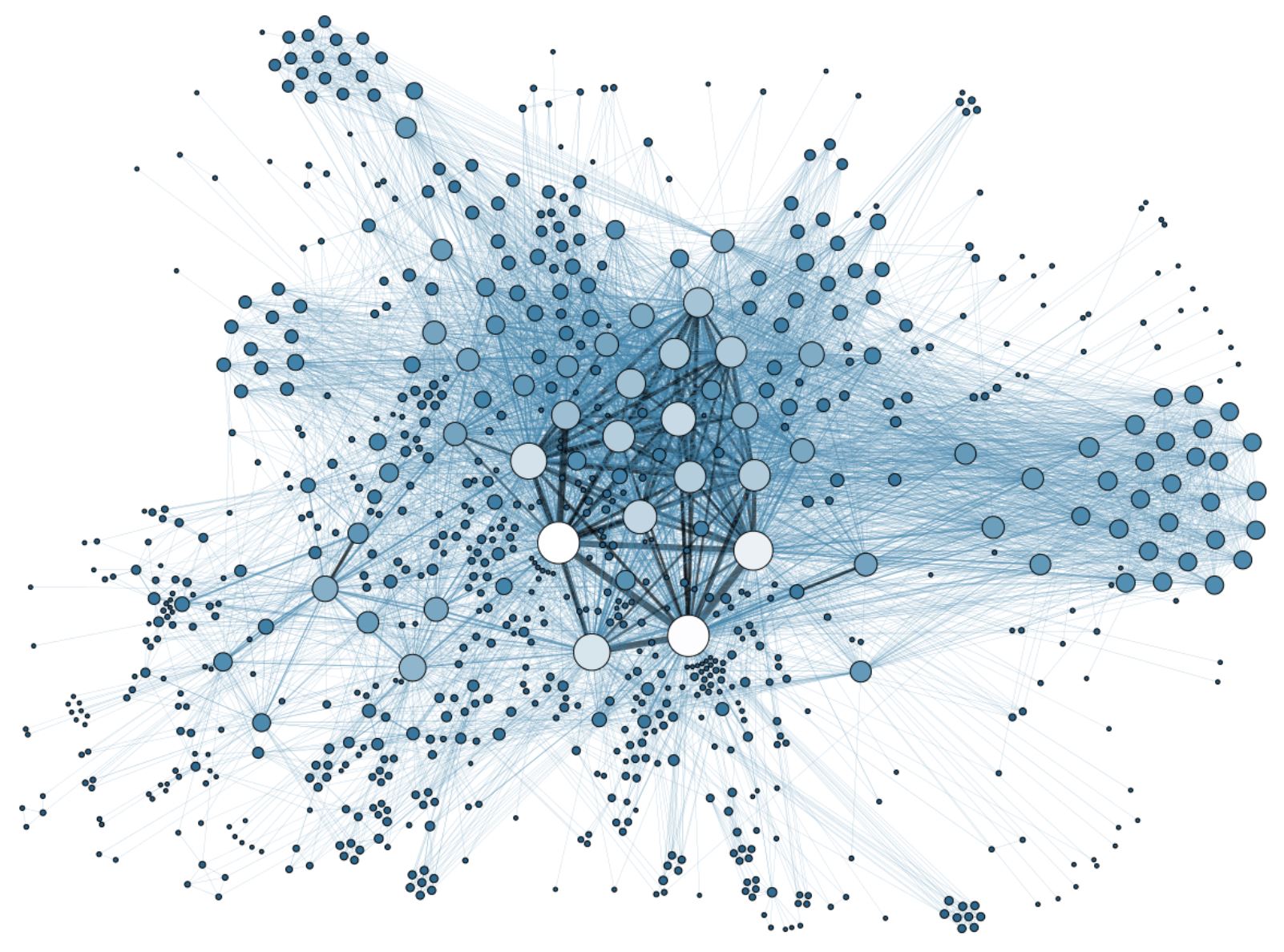

Individual-level disturbances that cause population-level effects in unexpected directions are nothing new in the study of social systems; such unexpected consequences are referred to as paradoxical effects. In the case of moral enhancement, there is evidence that one commonly proposed and relatively uncontroversial moral enhancement – increasing the individual disposition towards cooperation – could have severe paradoxical effects at the population level. Empirical and theoretical studies suggest that increasing individual cooperation will be accompanied by unwanted increases in in-group favouritism. Therefore, increasing the propensity towards cooperation between individuals might lead to a decrease in the propensity towards cooperation between groups. This is particularly worrying given that cooperation between groups (e.g. international cooperation) is central to solving global risks.

The fact that cooperative tendencies are mostly limited to in-groups is known in the scientific literature as parochialism or in-group favouritism. Parochialism is the tendency to cooperate preferentially with members of one’s own group rather than with members of out-groups, even at the cost of harming out-groups. This tendency generally increases with how cooperative individuals are within their own groups: groups that are highly cooperative internally will tend to be the least cooperative with other groups. The relationship also holds in the opposite direction: competition between groups leads to increased contributions to the public good within groups and to increased group effectiveness[1]. People who exhibit higher levels of parochialism, cooperating more within their group, also have a higher proclivity to conflict with out-groups[2].

Many theories have been proposed to explain why non-kin cooperation evolved. Several of them hold that this type of cooperation could only have become evolutionarily stable if it coevolved with aggression towards out-groups. For example, Bowles & Gintis[3] attempted to model the evolution of cooperation using the best available estimates of group size and food sharing during the Palaeolithic. Even under the estimates least favourable to this conclusion, their results show that parochialism and cooperation could only have evolved together. It is therefore reasonable to assume that the brain networks responsible for these traits are strongly interconnected, and that a moral enhancement targeting only one of them would be hard to develop. As further support for this assumption, oxytocin – one of the drugs cited as preliminary evidence that we could one day develop a moral enhancement – seems to increase altruism, cooperation and generosity, but it is also known to produce in-group favouritism, leading to ethnocentrism and parochialism.

Another set of theories of how non-kin cooperation evolved relies not on between-group competition but on our tendency to punish cheaters. We display a proclivity to punish defectors even when we are not the ones being harmed, and even when doing so is costly to us. This tendency is known as altruistic punishment. The evolution of such a disposition would ensure that, even if cheaters could initially achieve higher fitness, their behaviour would eventually become too costly because of third-party punishment. Studies suggest that increasing altruism alone could also reduce altruistic punishment, which would weaken the incentives against cheating: when individuals become more concerned for the well-being of others, they also become less willing to harm them, even if those others violate cooperative rules.

Moreover, altruism is linked to the highly overlapping trait of agreeableness, which seems to mediate compliance with unjust orders of the kind explored in Milgram’s experiments. As mentioned before, altruism also seems tightly connected to parochialism. Compliance with unjust orders and parochialism are two human dispositions that have been argued to be important factors in the emergence of totalitarian regimes. Totalitarianism is perhaps the closest that modern human society has come to the global catastrophes that moral enhancement is proposed to help us avoid. Aversion to causing harm to others, a component of agreeableness, seems to be increased by raising serotonin levels, but raising serotonin has also been shown to decrease the willingness to punish cheaters – a disposition that, as mentioned, might be indispensable to human cooperation. Once again, unwanted paradoxical effects can arise from failing to account for how increasing one desirable trait at the individual level can lead to increases in undesirable ones at the population level.

I propose that one way of avoiding this class of risks is to focus on enhancing virtue. I contend that virtue enhancement can avoid this sort of risk by providing an empirically grounded framework that concentrates on the practical societal effects of changing moral dispositions. For instance, virtue enhancement would prescribe altruism not only to the right extent but also in the right social contexts. It would focus on increasing altruism while preserving our sensitivity to unfair situations and our disposition to take aggressive action to punish cheaters. Virtues can be defined as those general and stable patterns of behaviour, thought or emotion that are conducive to the good; this contrasts with a focus on the actual or expected consequences of actions, or on the fulfilment of generalizable maxims[4].

Firstly, a virtue theory is primarily concerned with moral agents and their traits insofar as these lead to the good. Moral enhancement is primarily concerned with interventions expected to lead to better moral behaviour or motives, and one obvious way of achieving this is by intervening on the traits that lead to the good. Furthermore, as Casebeer & Churchland have extensively argued[5], a virtue theory is more likely to be heavily informed by neuroscientific data on moral traits, which helps it avoid ignoring the complexity of our descriptive moral psychology; besides reducing risks, this would also help ensure that moral enhancement is technically feasible. Finally, virtue theories characteristically aim at the proper balance between competing moral dispositions. This would help prevent, for instance, increasing local altruism at the cost of greater compliance with injustice, or increasing cooperation inside a group at the cost of greater out-group conflict.

End Notes:

[1] For a meta-analysis cf. Balliet, D., Wu, J., & De Dreu, C. K. W. (2014). Ingroup Favoritism in Cooperation: A Meta-Analysis. Psychological Bulletin, 140(6), 1556–1581; for a conceptual overview cf. Hewstone, M., Rubin, M., & Willis, H. (2002). Intergroup Bias. Annual Review of Psychology, 53(1), 575–604. doi:10.1146/annurev.psych.53.100901.135109

Bornstein, G. (2003). Intergroup conflict: individual, group, and collective interests. Personality and Social Psychology Review, 7(2), 129–145. doi:10.1207/S15327957PSPR0702_129-145; see also De Dreu, C. K. W. (2013). Social conflict. In Current Sociology (Vol. 61, pp. 696–713). doi:10.1177/0011392113499487

E.g. Cardenas, J. C., & Mantilla, C. (2015). Between-group competition, intra-group cooperation and relative performance. Frontiers in Behavioral Neuroscience, 9, 1–9. doi:10.3389/fnbeh.2015.00033; Puurtinen, M., & Mappes, T. (2009). Between-group competition and human cooperation. Proceedings of the Royal Society B: Biological Sciences, 276, 355–360. doi:10.1098/rspb.2008.1060; Burton-Chellew, M. N., Ross-Gillespie, A., & West, S. A. (2010). Cooperation in humans: competition between groups and proximate emotions. Evolution and Human Behavior, 31(2), 104–108. doi:10.1016/j.evolhumbehav.2009.07.005; Bornstein, G., Winter, E., & Goren, H. (1996). Experimental study of repeated team-games. European Journal of Political Economy, 12(4), 629–639. doi:10.1016/S0176-2680(96)00020-

[2] Sidanius, J., & Veniegas, R. C. (2000). Gender and race discrimination: The interactive nature of disadvantage. In Reducing Prejudice and Discrimination: The Claremont Symposium on Applied Social Psychology (pp. 47–69).

[3] Bowles, S., & Gintis, H. (2011). A cooperative species: Human reciprocity and its evolution. Princeton, NJ: Princeton University Press.

[4] Nevertheless, the good might in turn be defined in terms of good consequences or the fulfilment of norms.

[5] Casebeer, W. D., & Churchland, P. S. (2003). The neural mechanisms of moral cognition: A multiple-aspect approach to moral judgment and decision-making. Biology and Philosophy, 18(1), 169–194. doi:10.1023/A:1023380907603
