Written by Darlei Dall’Agnol[1]

I recently attended the course Drones, Robots and the Ethics of Armed Conflict in the 21st Century at the Department for Continuing Education, Oxford University, which is, by the way, offering a wide range of interesting courses for 2015-6 (https://www.conted.ox.ac.uk/). Philosopher Alexander Leveringhaus, a Research Fellow at the Oxford Institute for Ethics, Law and Armed Conflict, spoke on “What, if anything, is wrong with Killer Robots?” Wil Wilson, a former RAF Regiment Officer who now works as a consultant in Defence and Intelligence, was announced to talk on “Why should autonomous military machines act ethically?” but changed his title, which I will comment on soon. The atmosphere of the course was very friendly and the discussions illuminating. In this post, I will simply reconstruct the main ideas presented by the two speakers and give my own impression of this important issue at the end.

In his presentation “What, if anything, is wrong with Killer Robots?” (a forthcoming paper, for those interested), Alexander Leveringhaus started by defining a robot as an artificial device, an embodied artificial intelligence, that can sense its environment and purposefully act on or in that environment. Although this may not be a clear-cut definition, most military robots fit it, for example the Dragonrunner Robot, the Alpha Dog, the Predator Drone (MQ-1), Taranis, Iron Dome and the Sentry Robot. He then argued that the main objection to using autonomous weapons in an armed conflict is not that there is an accountability gap between programming and operating these tools and what they will actually do; the real issue is whether the imposition of such risk can be justified. He discussed whether the use of unsafe weapons means that the operator is culpable, and whether the justified use of a killer robot means that the operator is still responsible, though not culpable. Leveringhaus then presented his Argument from Human Agency against using killer robots, relying on a distinction between intrinsic and contingent reasons (the former say that X is desirable irrespective of any other factor, while the latter mean that whether X is desirable depends on a number of factors). Briefly, the argument was intended to show that the deployment of such weapons would be intrinsically wrong even if it were not excessively risky. Leveringhaus held that what is valuable about human agency is the ability to do otherwise: for instance, a soldier can, out of compassion, choose not to shoot a target in particular circumstances even if he was ordered to do so. If robots are programmed to kill, they will just do that, since they lack this agency. That is to say, they lack the ability to do otherwise, which is preserved in the soldier’s case. He then concluded that we have reasons not to deploy semi-autonomous killer robots.

In his talk “Delegating Decision Rights to Machines”, Wil Wilson discussed whether it is ethical to allocate decision making to robots, particularly in contexts of armed conflict. He started by pointing out that there is apparently no substantial difference between an agent who is a member of the military and one that is an autonomous weapon: both may, for instance, mistakenly identify targets, and both may take the law into their own hands. He then pointed out that we have strong reasons to delegate to such machines the decision about what to do, for instance the huge amount of data, which nowadays is almost impossible for humans to handle in due time. Now, in order to answer whether it is ethical to do so, he presented several criteria for judging whether an armed conflict is justifiable: just cause, military necessity, probability of success, discrimination, right intention, competent authority, comparative justice, proportionality, not ‘evil in itself’ and last resort. Unfortunately, he did not give many details on how to apply all these criteria in the case of armed drones or killer robots. However, he did hold that since there is no substantial difference between what people do and what robots would do, there is no reason not to employ autonomous weapons in armed conflicts. He finished his talk by saying that the choice between humans and autonomous war machines is just a pragmatic question, that is, it revolves around which has the better chance of succeeding.

I will not comment on or critically assess the two speakers’ views. It seems clear that they did not disagree on whether it is ethical to employ killer robots in particular wars, despite the fact that, if I understood them correctly, Leveringhaus wants always to keep a human agent “in the loop” while Wilson was much in favour of delegating decisions to automatic killer robots. Instead of taking a side in this debate, I will, for those interested in reading more about the subject, just reproduce the suggested literature for the course (see below).

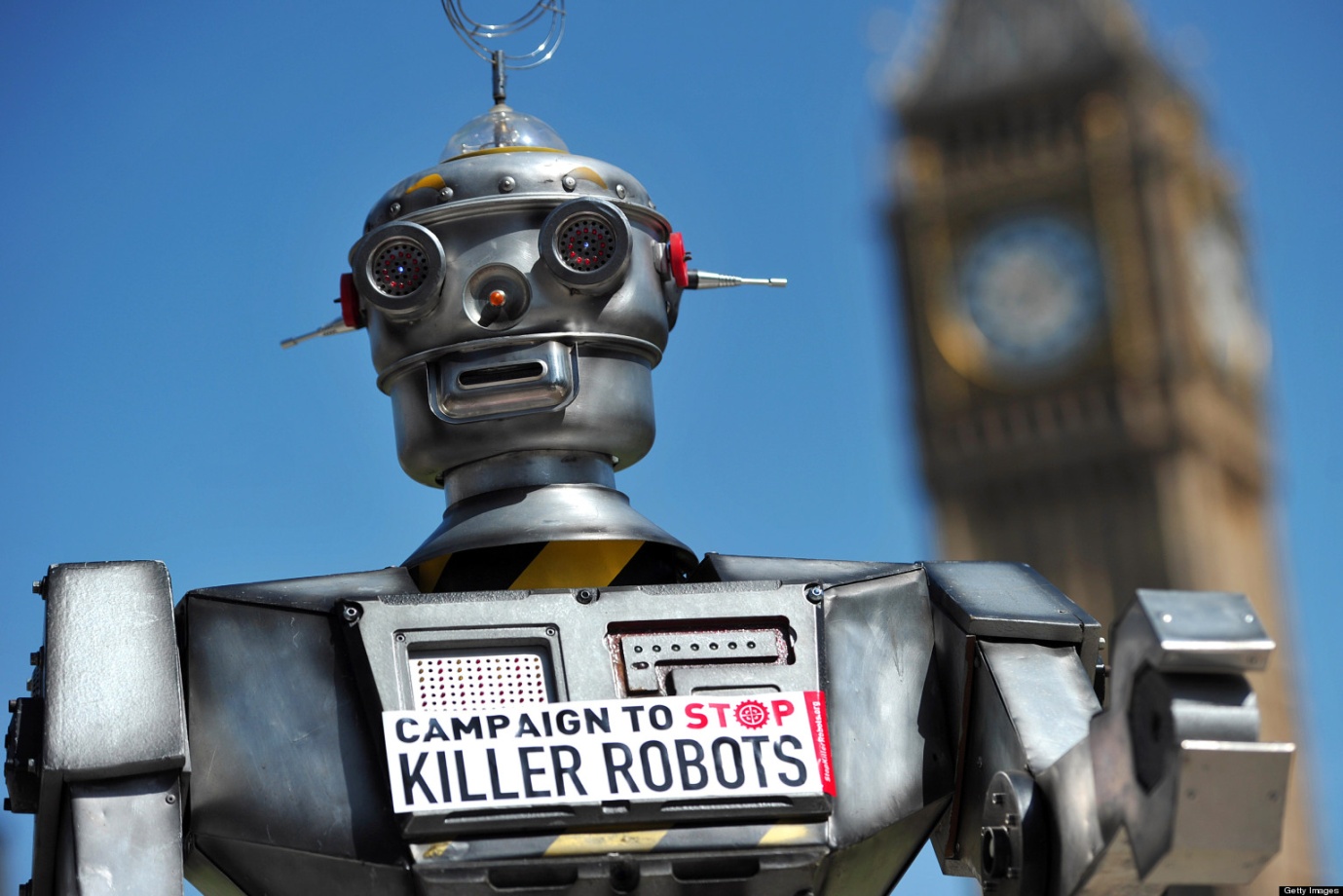

I will now present my own views on this subject, which I also voiced in the “question and answer session” of the course. What I said and still believe is that we have, above all, to bear in mind the changing nature of wars in the 21st century. That is to say, a war between two or more states deploying atomic bombs is nowadays simply irrational, since it may lead to the annihilation of life on this planet. This was the main concern of the so-called Cold War. The same applies to wars using chemical and biological weapons with the potential for mass destruction. Now, I have the impression that “robotic wars”, if they are not just games, will turn out to have the same character. In other words, they will be so destructive of human life that waging them will be irrational. What is the point of preparing a war, say between the United States and China, using millions or even billions of killer robots if it could lead to mutual destruction? Thus, I believe that we simply must “give peace a chance”, not any momentary or local peace, but perpetual, world peace. My suggestions for facing the danger killer robots bring are therefore these. First, we must all endorse initiatives such as Human Rights Watch’s call for a pre-emptive ban on killer robots (http://www.stopkillerrobots.org/), since current warfare technology is de-personalizing and de-humanizing the killing process to such a degree that terminators will soon be not only science fiction, but perhaps our real enemies. Second, in order to make the ban on killer robots (and indeed on atomic bombs, chemical and biological weapons of mass destruction etc.) effective, why not reform the UN Security Council, transforming it into the only authority over the use of military force on earth and abolishing all national armies? Armed drones could then still be used for military purposes under such authority, for instance to combat terrorism, but not killer robots.
That is to say, armed conflicts, but certainly not wars between states, would perhaps still exist in the 21st century in very special circumstances. However, they would not have the potential to kill millions of innocent people or even to represent an existential threat to mankind and other forms of life.

SUGGESTED READING

Arkin, R.C., ‘The case for ethical autonomy in unmanned systems’, Journal of Military Ethics, 2010.

Kaag, J. & Kreps, S., Drone Warfare (Polity, 2014).

Menzel, P. & D’Aluisio, F., Robo Sapiens (MIT Press, Cambridge, 2000).

Robinson, P. et al., Ethics Education in the Military (Ashgate Publishing, 2008).

Singer, P.W., Wired for War: The Robotics Revolution and Conflict in the 21st Century (Penguin, 2009).

Smith, R., The Utility of Force (Penguin, St Ives, 2006).

Sparrow, R., ‘Killer Robots’, Journal of Applied Philosophy, 2007.

Strawser, B.J. (ed.), Killing by Remote Control (OUP, 2013).

Time Magazine, Rise of the Robots (Time Inc. Specials, New York, 2013).

Wallach, W. & Allen, C., Moral Machines: Teaching Robots Right from Wrong (Oxford University Press, 2009).

[1] Professor of Ethics at the Federal University of Santa Catarina. I would like to thank CAPES, a Brazilian federal agency, for supporting my research at the Oxford Uehiro Centre for Practical Ethics. I would also like to thank Alexander Leveringhaus and Wil Wilson for making the PowerPoint slides of their presentations available.

The attraction to killer robots is the nationalistic idea that our soldiers are more valuable than other people’s soldiers. It’s blatant discrimination that is playing out in other avenues as well. When Obama said there would be no boots on the ground against ISIS, but that instead it would be Syrian and Iraqi soldiers risking their lives (in a conflict that we basically created the conditions for, as well as significantly benefit from), he was basically saying that American troops are more important and their lives shouldn’t be risked, but that the lives of those other soldiers (who aren’t as well trained, and have significantly fewer resources to aid in their defense) are worthy of being put in harm’s way.

We need the cost of war to be high, so that people refuse to fight wars. When the cost of the US military is already in the hundreds of billions of dollars, financial costs are no longer a deterrent to fighting a war; only the cost in lives remains a deterrent. So we need to put more lives at risk in order to fight fewer wars. With the advent of autonomous/remote weapons platforms, it becomes more likely that we will engage in more wars, with fewer costs to deter us.

My concern with drones and killer robots, and anything else that increases the asymmetry between potential invaders and the folks who are attacked, is that it makes “intervention” much more likely. If you’re a big country, and you want to make a small country toe the line (on whatever grounds), then you’ll take the costs of intervention more seriously if bodybags come home to your country. If “humanitarian” intervention is costless, you’ll see a lot more of it. And most intervention is, to a value pluralist like me, a very bad idea. So the concern for me is less the rise in killer robots, and more the profusion of interventionists such as Tony Blair. As the costs of intervention drop and the other payoffs remain the same, expect more intervention.

I’d highly suggest reading some RAND stuff from ~2013 on this.

The first major issue the author has is in demonstrating that a computer / robotic program is in any way different from a biological training program in terms of conditionals. That is, military production of “killing machines” since WWII has been very much about two things: reducing the biological (human) resistances to killing (cf. % kill shots taken by soldiers on the field ~ up to 98%, go humanity!) and creating heuristic flow-charts of engagement that allow all scenarios to be modelled and to conform to said models.

Pretending that a professional military at this point is somehow different from a robot is rather to mistake the form / material for the logos. (That’s without the kill-cams from high-tech helis playing in the background.)

Hint: they’re identical at this point (to a % difference where one falls under “failure to obey orders” and the other “system failure”).

Added to this, a “killer robot” is a total MacGuffin. Drones etc. are just the fluff; we’re a long way from Kansas now. You’re wanting to say “autonomous program that has material connections that can produce death”. In which case, say hello to your Google Car or your local HFT that just dumped a Central American economy in the dirt to make 0.01 c on the dollar or the software that shows adding PBCs to cans saves you $0.01 at the cost of x thousand cancers or…

You get the point.

Simply put: you cannot have an ethical critique of “killer robots” unless you know what algos are doing.

Shooting rockets at poor brown people is rather missing the point. (Hint: a drone can kill, say, 150 people at a wedding with a couple of Hellfires; an HFT can kill a few million by destabilising an entire food market.)

MEDIOCRE.

A. Wilson wrote me an e-mail further clarifying his views, which I would like to post here: “I had hoped to get the message across that my stance is that I would want to see decisions rights allocated to the most effective agent – whether that is a machine or a human – and that I am not so much in favour of this as I accept that this is the way things are going so we must think about it if we want to influence it.”
