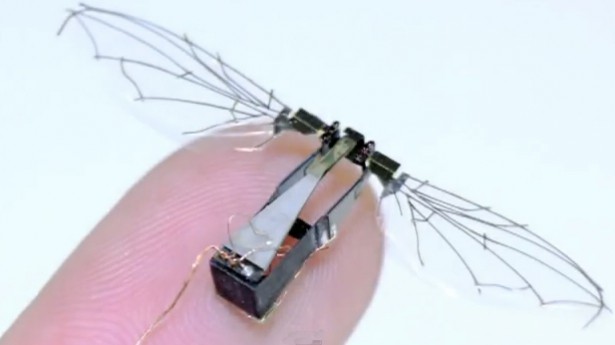

BBC News reports that Harvard scientists have developed the world’s smallest flying robot. It’s about the size of a penny, and it moves faster than a human hand can swat. Of course, the inventors of this “diminutive flying vehicle” immediately lauded its potential for bringing good to the world:

1. “We could envision these robots being used for search-and-rescue operations to search for human survivors under collapsed buildings or [in] other hazardous environments.”

2. “They [could] be used for environmental monitoring, to be dispersed into a habitat to sense trace chemicals or other factors.”

3. They might even behave like many real insects and assist with the pollination of crops, “to function as the now-struggling honeybee populations do in supporting agriculture around the world.”

These all seem like pretty commendable uses of a new technology. Yet one can think of some “bad” uses too. The search-and-rescue version of this robot, for example, would presumably be fitted with a camera, and the prospect of a swarm of tiny, remote-controlled flying video recorders raises some obvious questions about spying and privacy. It also prompts one to wonder who will have access to these spy bugs (the U.S. Air Force has long been interested in building miniature espionage drones), and whether there will be effective regulatory strategies capable of tilting future usage toward the search-and-rescue side of things and away from the peep-and-record side.

This is a general problem. Indeed, most new technologies could turn out to be double-edged swords: mini-drones are just one salient example. To give another, my colleagues Olga Wudarczyk, Anders Sandberg, Julian Savulescu and I have recently been grappling with the ethics of another imminent technological prospect that might be used for “good” or for “evil”: “anti-love biotechnology.” This term refers to neurochemical interventions that could be used to diminish potentially harmful forms of love or attachment, such as the addictive love a domestic abuse victim might feel for her abuser, or the attraction someone with pedophilia might have toward small children.

Such technology could also be used for illiberal or even immoral ends. Just imagine a kind of high-tech conversion therapy, and then think of the religious fundamentalists who might be all too eager to get their hands on it. Scary possibilities such as these make the philosophy of the Luddite seem instantly appealing.

But we have to think such matters through a little more carefully. In the first place, we have to remember that the potential harms that might accrue from the misuse of any new technology must be weighed against the potential benefits that might accrue from its responsible use. It might be that the prospective harms outweigh the benefits, but this kind of careful weighing-up has to actually be attempted.

In addition, as Nick Bostrom and Rebecca Roache (2011) have argued, it is not enough simply to run the calculations. We also have to make a serious effort to identify “potential supporting policies and practices that can alter the balance for the better” (p. 144). This is true whether we’re talking about tiny flying drones, anti-love pills, or anything else. The philosopher C. A. J. Coady (2009) has articulated a similar view:

If indeed there is insufficient knowledge of outcomes and consequences, or no social or institutional regulatory regime for prudent implementation of the innovations and for continuing scrutiny of their effects, or no room for overview of the commercial exploitation of the innovations, then … critics [of new technologies] clearly have a point. [But] warnings can be heeded. [We can] insist on safeguards and regulation, both scientific and ethical. (p. 165, emphasis added)

So what’s the lesson here? Yes, we have every reason to be afraid of flying camera bugs lurking on the walls of our bedrooms. And we might think that anti-love biotechnology is nothing more than a disaster waiting to happen. But both of these technologies, along with so many others, might bring about much more good than harm if they are coupled with the right kind of regulatory structures, monitoring regimes, and safeguards.

The real work, then, is not to put a universal stop sign in front of double-edged innovations. Instead, it is to get out ahead of them with clear ethical thought and effective legal protections. Let’s get cracking on this important work!

References and resources

Bostrom, N., & Roache, R. (2011). Smart policy: cognitive enhancement and the public interest. In J. Savulescu, R. ter Meulen, & G. Kahane (Eds.), Enhancing Human Capacities (pp. 138-152). Oxford: John Wiley & Sons.

Coady, C. A. J. (2009). Playing God. In J. Savulescu & N. Bostrom (Eds.), Human Enhancement (pp. 155-180). Oxford: Oxford University Press.

Earp, B. D., Sandberg, A., & Savulescu, J. (in prep). Powerful technology and problematic norms: The looming threat of high tech ‘conversion therapy.’ Some of the material in this post was taken and/or adapted from this in-prep paper.

Earp, B. D., Wudarczyk, O. A., Sandberg, A., & Savulescu, J. (under review). If I could just stop loving you: Anti-love biotechnology and the ethics of a chemical breakup.

Earp, B. D., Wudarczyk, O. A., Foddy, B., & Savulescu, J. (under review). Addicted to love: What is love addiction and when should it be treated?

———————————————

You seem to have overlooked much more basic immoral ends to which such tiny flying critters could be directed. For example: being mass-produced in their millions, loaded with deadly venom (or virulent diseases), programmed to inject it into humans, and then released over “enemy territory”. Tiny robots could well prove to be the most effective weapons of mass destruction.

Good point, Nikolas — there are probably other immoral ends as well. What do you think is the appropriate way to head off such scary possibilities?

International agreements on the morally defensible use of tiny robots seem the obvious way to go. When such agreements will be needed and how effective they’ll prove to be, only time will tell 🙂

I agree that these calculations need to be attempted. The problem is that the probabilities of the numerous outcomes are extremely difficult to estimate, and the margins of error are so great that the calculation can become meaningless. Various episodes of Star Trek come to mind… and the Prime Directive! I would argue that we have much less control over what we create than we imagine, especially in the future. How often do international agreements and laws actually work? This would need to be factored into the overall calculation of the possibility of harm.

Good post. Just wondering: could this be regulated under the recent Small Arms Trade Treaty? http://www.un.org/disarmament/ATT/

Identifying the legal regime under which this new technology will be governed is challenging. It may exist, but like all law, it’s difficult for it to keep pace with technology. Often it is only after the technology is deployed and used destructively that the issue of regulation comes into play. If you look at the history of the use of nerve gas or various types of landmines, it was only after the fact that new laws were developed to address the consequences. Look today at the problem of trying to regulate the use of drones by governments when it is not covered under the laws of war.

The use of drones by states during peacetime is inevitably going to be more complex. The selling and buying of this type of surveillance technology might be better regulated by laws other than human rights or IHL treaties. International Humanitarian Law seems slow to keep up with these developments. I think it would be good to distinguish military use, which falls under IHL, from commercial or state use in peacetime, which might fall under human rights law but also under a whole other body of national law and public international law.

The ethical issues may be separate as well. For instance, government militaries are far more circumspect about using weapons as a method of combat. Questions of proportionality, necessity, and the ability to distinguish between combatants and civilians come into play, and I think these are taken much more seriously there than in, for instance, civilian use for surveillance. Ironically, the boundaries of state security are far more blurred in peacetime law than during a conflict.

Anyway, it would be a good project to look at!

Good post and interesting to read. I am just wondering: could the recent UN Small Arms Trade Treaty cover some of the ground suggested by your post?

The ICRC regularly reviews the use of various weapons in combat and tries to apply International Humanitarian Law to the means and methods of warfare. Proportionality, distinction, and necessity are some of the principles used; however, IHL is notoriously slow in keeping pace with developments in new technology. If you look at the history of war, it is usually after the fact that regulation comes into consideration; for instance, nerve gas and certain types of landmines were technologies that outpaced the law of their time. The current problem of finding international laws to cover the use of unmanned drones in areas that are not technically zoned as an armed conflict, and that are controlled not by a military body but by some other wing of government, is particularly complex. To say the least, these cases show how mischievously governments can seek to manipulate international law.

Having seen your post, I had a look back over William H. Boothby’s work on Weapons and the Law of Armed Conflict (2009), and he does indeed cover the question of how new technologies are addressed by older treaty law. Military people are quite conservative and cagey about re-opening treaties or providing new protocols that might dilute the original texts. On that point Boothby wrote, ‘It is not excessively melodramatic to point out that the existing provisions in the law of weaponry have been written in the blood of past victims’ (p. 366). It goes to show what happens when technology outstrips law.

During peacetime, human rights law too has struggled first to comprehend and then to respond to state security laws designed to limit the effectiveness of unwelcome threats. Finding the balance between security and liberty over the last decade has been strikingly frustrating, especially since governments started using the lingo of “unlawful combatants” and a globalised “war on terror”. Nils Melzer, ‘Targeted Killing in International Law’ (2008), goes a long way in clarifying the law for law enforcement agencies and is good on the issue of ‘Effective Control’. Even if law enforcement were to use tiny drones for operations, I can only surmise that the law would trace responsibility back to those in control of such technologies.

Designing ethical principles will be challenging because this type of law is mired in politics and power. IHL is overarched by jus ad bellum, and IHR is overarched by state sovereignty. There is plenty of groundwork in both bodies of law, but there is of course the problem of keeping up, or the unfortunate experience of waiting for new technology to be deployed by a military or law enforcement agency and then reacting.

The bioethical sphere is virgin territory to me! It uses unclarified language like “dignity”, which puts my blood pressure up. I wish you well in your forays there and look forward to any findings you might uncover.

See

M Wheelis and M Dando, ‘Neurology: A case study for the imminent militarisation of biology’ (2005) 859 IRRC 553

Schmitt, Michael N., Drone Attacks Under the Jus ad Bellum and Jus in Bello: Clearing the ‘Fog of Law’ (March 2, 2011). Yearbook of International Humanitarian Law, Forthcoming. Available at SSRN: http://ssrn.com/abstract=1801179
