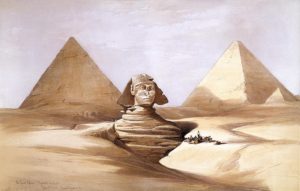

Image: The Great Sphinx and Pyramids of Gizeh (Giza), 17 July 1839, by David Roberts: Public Domain, via Wikimedia Commons

Words are powerful. When a word is outlawed, the prohibition tends to chill or shut down debate in a wide area surrounding that word. That tendency is much discussed, but it’s not my concern here. It’s one thing declaring a no-go area: it’s another when the mere use or non-use of a word is so potent that it makes it impossible to see something that’s utterly obvious.

There has recently been an excellent and troubling example. Some museums have started to change their labels. They consider that the use of the word ‘mummy’ demeans the dead, and are using instead the adjective ‘mummified’: thus, for instance ‘mummified person’ or ‘mummified remains’. Fair enough. I approve. Too little consideration is given to the enormous constituency of the dead. But using an adjective instead of a noun doesn’t do much moral work.

Consider this. The Great North Museum: Hancock has on display a mummified Egyptian woman, known as Irtyru. Visitor research showed that many visitors did not recognise her as a real person. The museum was rightly troubled by that. It sought to display her ‘more sensitively’. It’s not clear from the report what that means, but it seems to include a change in the labelling. She will no longer be a ‘mummy’, but will be ‘mummified’. She is a ‘mummified person’. She’ll still remain in a case, gawped at by mawkish visitors.

The museum manager told CNN that he hoped that ‘our visitors will see her remains for what they really are – not an object of curiosity, but a real human who was once alive and had a very specific belief about how her body should be treated after death.’

Let that sink in.

Whoever Irtyru was, she did indeed have a ‘very specific belief about how her body should be treated after death’. It did not involve lying in Newcastle, causing school children to scream. To describe her as ‘mummified’ rather than ‘a mummy’ does nothing whatever to address the offence of displaying her in a way wholly inconsistent with that ‘very specific belief’. That the museum apparently thinks it does is a symptom of moral blindness. There is a real issue about the display of Irtyru: it is not addressed by tweaking a word. More worrying is that that tweak seems to render invisible the very moral issue it purports to address. I’m not saying that Irtyru shouldn’t be displayed: I am suggesting that changing a word is no substitute for proper deliberation – let alone real change.

This is an example of a more general and sinister malaise. Virtue signalling has taken the place of serious, difficult ethical discourse.

Very interesting. This is part of a more general trend, where significant changes in language are imposed top-down (most often from academic departments). Leaving aside that this is not how language works, as language serves pragmatic communicative purposes and not the ethical or political aims of those working in academic departments or museum committees, these kinds of attempts are problematic for another reason. Imposed changes in language like these often carry with them a moral or political connotation that challenges the moral or political views of many, if not most, language users (that is, of every one of us). Examples of this phenomenon abound, especially in American universities recently. If you impose a change in language top-down, what you are asking people to do is to simply assume such moral or political connotations as part of the meaning of words, that is, as something that remains implicit in language use. This means that you are asking people to bypass the stage where such moral or political views would need to be scrutinised, discussed, and accepted or rejected by language users (every one of us). As you rightly say in this case, for instance, the issue of whether mummies should be displayed in museums is worth discussing, but by trying to incorporate the answer into the imposition of a new term, one simply makes the issue disappear within the implicit meaning of words.

Fortunately, the two problems somehow cancel each other out, as far as I can see. That is, because this is not how language works and evolves, these kinds of top-down attempts are destined to fail. People will likely keep using the term ‘mummy’ in everyday conversations, no matter what museum curators do. It might not be true for those working in academic departments, but it is true for most people outside of these. The former have far less authority over language than the latter. As for the moral issue itself, it will be more apparent and more likely to be properly scrutinised if we keep using the word ‘mummy’ to refer to mummies.

Alberto: yes indeed. Top-down edicts about how language should be used tend to stop discussion. And even if they don’t stop discussion dead, they (as described in the post) make invisible the elements that should be at the centre of any meaningful discourse.

Such edicts betray many types of insecurity. They indicate a lack of confidence in the whole process of dialectic, for instance. That’s a good reason why professional philosophers should rail against them.

I suppose the rage to sanitize and make things politically correct will expand, unabated. I guess we saw it all coming. Still, axiology and deontology can be taken to extremes. I count on this blog to let me know all it knows, good, bad and indifferent.

The phrase “Mummification and Moral Blindness” might have been “Mummification and Political Correctness”. Moral blindness is a loaded expression that will have cultural and personal or social connotations contingent upon private value judgments. Moralists will not like that approach as they are concerned to be seen as “normal” and just like other people, sharing a group/social norm. However, the group norm idea is a fiction/myth.

We may pay lip service to such groupness even though we override it frequently with our own personal values. We say, “X is morally right. Everybody knows that.” Everybody does not know the same values. For that to be possible we’d have to share the same emotional and behavioral experiences. That is highly unlikely.

We don’t play well together. Some will try to deal with that by reference to commandments and linguistics. This approach is a sideroad to nowhere. I hope we find a better way to play together. There is only one real group, and it is humanity. I hope but I do not expect that humanity will achieve this humanity level affiliation.

Thinking more about this, thoughts crossed my mind upon hearing of another slaughter of a black person, in Memphis, Tennessee. It is not only white police who are killing black ‘suspects’. More and more, blacks are being killed for being black, by whomever the killer happens to be. Once upon a time, a black man, who had recommended me for hire into his office, chastised me later for questioning his judgement and authority, calling that ‘attitude’. We became friends, as far as such friendships go. He ended up losing his position, largely due to the political influence of a white woman. Years later, he stood accused of the murder of his second wife. If he is still living, he is now in his eighties. I don’t read newspapers or follow current events but will always respect the man I once knew. That is all I am willing to do. I owe him nothing more, nor he me. Whether mummification is now verboten does not concern me. It is not, after all these centuries, a ‘practical matter’. Indiscriminate, or discriminate, killing is.

Larry: thank you. But ‘Mummification and political correctness’ doesn’t capture the full mischief.

I say in the post that I share the sensibility that’s behind the proscription of ‘mummy’ as a noun. Call me politically correct if you please. My concern is wider and deeper: not just with cancel culture, but with the presumption that a simple linguistic change exhausts our ethical obligations, and excuses us from thinking hard.

Thank you, Charles. Amen to your comment about the linguistic change presumption. Symbols are linked to emotions. Treating emotions with symbols is like using acetaminophen and aspirin to treat my peripheral neuropathy. Can’t get deep enough. Keep thinking hard!

I think, though am not certain, all points are valid as framed. Mr. Foster: the first sentence of your post sets primary emphasis. Three words, and about as concise as it gets. Larry and I think alike on many issues and topics. When we disagree, we disagree and, sometimes we even change our minds. Meanings change, as new semantic applications emerge with cultural and sociological re-organizations. Traditional meaning has not really changed if we are discussing ethics and morality. But, modern approaches and emphases (?) have, ergo things like cancel culture and revisionism replace or subsume traditions. Seems to me, anyway.

In this discussion thread there is a growing, probably unintentional, limitation of thought. The article clearly indicated a wider issue which required careful and difficult thought. Like simple closed versus open question debates, the issues become most clearly illustrated in the differences between outcomes.

Museums differentiating between the words applied to ‘exhibits’ does appear to be one way to illustrate the difference. But equally in this case the wider moral issues could become even more obvious to a wider audience if both words were contrasted within the information provided about an exhibit at the museum, rather than focusing any perceived moral issues into single words (political, language correction or philosophical). I would also suggest a museum’s attempts to provide environmental and cultural insights (for themselves and their visitors) are promoted by bringing the mummy into being as the mummified remains of an individual. So there does exist an element of potentially comprehensive moral blindness at this stage which is not (fully) recognized within the museum context. (More on that deliberated observation in a moment.)

As to this discussion thread: most of the writing, because the discussion has singular subjects (mummies) who were living human individuals, ignores the wider environment and cultural context within which those people lived. Starting with the museum manager’s comments, the linked object becomes limited to the individual’s life and remains, yet the individual’s beliefs would probably have included issues such as their body remaining in their final resting place, so an understandable source of moral tension (exact point and type depending upon constructed/accepted worldview) probably exists for museums at that point.

Secondly, individuals and social groups do not live only within themselves. The mummies existed within a wider environment, something the modern world appears to be partially moving away from acknowledging. (Surviving communications/structures/choice of lived environment from earlier times often reveal a surprisingly wide appreciation of those other areas.) Whilst humanity itself may be perceived as one social group, it is influenced, and its actions are somewhat determined, by the wider environment and other denizens. Limiting discussions to the human arena limits moral thought in a similar way. And that is partially the limitation I mentioned at the start and which, because of today’s applied focus, has so far caused a blindness to the broader influences upon the mummy’s original living beliefs: a set of moral beliefs formed and contained within a society which itself created internal social groups whose function was to brutally mummify the remains of the dead and put them in a place of rest which was eventually often guarded against any disturbance by grave robbers and such, who may disturb the hoped-for peaceful afterlife. It is possibly a recognition of that which causes the museum’s conscious re-wording of the information regarding mummified ‘exhibits’ (probably a forbidden word for mummies) to the public, because that re-wording facilitates the creation of some celebration of the life and works of the deceased individual concerned whilst at the same time benefiting the museum and its members by illustrating their own knowledge and apparent empathy, and can also be rationalised as morally praiseworthy because it can be seen to celebrate the life and place of the mummified individual in their currently perceived historical context, an individual who, it may be argued, would have been pleased with that outcome. Respect becomes displayed.

Today such a situation may be seen to be an ethical response to found material fitting with existing circumstances, one which respects the individual even though it can be seen to deny the ethical and moral world the mummies respected, lived and died in. Which ethical set of rules should be applied, and why? And today, which rules in the various moral sets, if any, should have precedence? That appears as the larger question being raised by the article and is what is mainly ignored by the focus upon words and a set of physical remains by a world which has lost appreciation of the integrity of the beliefs of those dead peoples.

N.B. My apologies to Charles for this but it does seem allied to the article. A great deal of meaning is contained within the content of words which requires itself to be known if what is being said is to be comprehended, and whilst speaking of word use and the limiting of moral thought by the trite use of words, I have been looking for a word which reflects the singular use of systemic thought processes which limits thinking within one sphere/worldview/perspective/philosophy (irrespective of subject). A sort of blinkered or tunnel vision arising from and then applied as the only focus for the subject (something sometimes referred to as providing the true answer). Almost an opposite to holistic thought. I have been using a made-up word, systemacity, but ask if anybody knows an existing single word appropriate to this.

After my earlier comments I made the following notes to myself as part of my own research:-

“This illustration of moral progress (my response to the article) and how it may occur hinges upon the inquisitive nature and thoughtful reflection about others.

i.e. object, artifact, exhibit; mummy; mummified person.

However such individual actions become constrained within the social group and boundaries surrounding progress during the particular era in which they occur possibly thereby producing the qualitative/quantitative debates previously referenced in the earlier (2022) linked to note.

e.g. Broad considerations of the outcome of the application of the current moral frame to bodies from the past are revealing. A king would be most infuriated by such demeaning treatment but a slave would be conflicted or flattered. Yet in both cases today’s ethics/morality would feel piously justified.

Any privacy expectations in both of those examples (dead king or dead slave) would not be reflected in any action, and so not respected. And that appears as a point which is most reflective of the predominant morality in today’s era. [Foster, 2023 #21483]”

Later I switched on the UK news and was confronted by the coverage about British Gas and their methods of recovering debts from vulnerable people by installing pre-paid meters in the depths of winter during the current fuel price crisis. That is an excellent illustration of the systemic moral/ethical failures being perpetuated within UK culture, which were indicated by this article and my comments about failing to appreciate the integrity and beliefs of long dead people (mummies) by applying current moral codes which are self-serving. The result of which is a total ignoring of any privacy type expectations, which exhibit some respect, for the individual within the environment they occupy.

That failure was not merely British Gas or the courts; the whole system of protections for individuals failed. Yes, yes, mainly because of political actions in various spheres which created this lack of any resilient ability to cope or provide, but that only serves to strengthen the ethical/moral point being made.

A quotation by Hegel reveals a similar type of social issue:-

Take the famous adjuration of Plato to the stars in the lines:

When thou gazest forth at the stars, my star,

Would that I were the heavens and thence on thee

Could gaze forth out of a thousand eyes.

Considering privacy issues, even with today’s increased transparency, the paradoxical messages contained in the context in which Plato formulated those words, like the situation of mummies, become very one-sided.

i strongly believe that culture must be preserved. when you take note into some of the lines in the article some points are valid. just like the catholics use mary and joseph in the churches

Consider the possibility that cultures and religions are detrimental to human adaptability. Although they were useful for group control during the tribalism period, they now are driving humanity to extinction. They encourage humans to think they can be lazy and get away with it.

To me, there is more than a little evidence of tribalism’s resurgence. Those of us who have been around long enough remember what it looked like in the 1960s. It was mostly a kinder, gentler tribalism, with communal living experiments and other constructive features. This positive trend went south, amid the struggle for equality, civil rights and equal justice under law. Anarchy and mob rule, vestiges of tribal life prior to authoritarian governance, are resurfacing accompanied by conspiracy theory and misinformation. Professors of the social sciences, and some of their young proteges, have reason for worry, while government and its $taunch $upporters play down threat(s) and consider allegations knee-jerk nonsense. I think LV is pretty spot-on in his assessment. There is a razor-thin distinction between laziness and complacency. It has taken me some time to understand the word authoritarian. Authoritarian implies enactment and enforcement of law. Anarchist does not. I agree with preservation of culture, insofar as that does not obliterate civilization.

Without widespread cooperation, civilization is not civilized.

To be sure. Key qualifier. Sorta like the old philosophy caveat: ‘…if, and only if…’

Culture, tribalism, authoritarianism and anarchy are all social group words.

Each of those words also indicates elements struggling to attain some form of agreeable stability for themselves. Achieving a limiting yet stable definition becomes the objective, ignoring an acknowledged constancy of change in the drive for consensus achieved by a narrowing focus of understanding. This arises in Paul’s comments.

Taking the words so far deployed in the debate, democratic systems can allow all types of social groups and philosophies to function within them, provided none pose a realistic threat to the whole. Theory used to indicate that democracies eventually degenerate into dictatorships; perhaps that type of outcome sometimes becomes facilitated when a progressive authoritarian element, seeking to achieve a more comfortable fit for itself, expresses its frustration that not everybody fits by looking to widen the membership of its own worldview, even when that is not agreeable, or stable, for the whole. But this is not what this article is about or where it was going.

An acknowledgement of a dead individual’s cultural expectations is, but even that documented wish was influenced by today’s cultural expectations, which have changed since mummification was de jure within Irtyru’s social group.

Rules-based authoritarianism, the old punchbag of political correctness, and anarchic thought all mask a basic narrowing of acceptable outcomes, thereby emasculating the potential for broader comprehension. Ergo Larry’s later comment. Compare the developments over museum mummies, apply that ethical/moral dynamic to the gold-leaf-coated mummified body of a dead priest on display as a prayer object in the Temple of a Thousand Buddhas in Hong Kong (if memory serves correctly), and a flexibility of values can be seen to be required if wider social group ethical/moral beliefs are to be inclusively comprehended rather than adapted by an understanding applied from within any particular culture or social group. Think ethnological methods here.

Applying the same considerations to something closer to the present day like the dead from today’s armed conflicts or natural disasters, and the application of broader considerations containing reasons which further confront and lead back into the discussions above demand a necessity to at least respect the individual level, including the lived environment/beliefs/changing expectations where possible. Unless a particular ethics/morality is culturally moribund surely that is of importance to the comprehension of the subject area.

It doesn’t usually take me five hundred words to convey what I want to say. So here it is: In my opinion ethics and morality are moribund. Or, for ease of reading, dead. Having already said this, I iterate something I have wrestled with for years. Complexity is collapsing under its own mass. I have likened this to a figurative black hole, subsuming everything it touches. This is longview historiography. One or two current thinkers get some of this. However, even they are limited by their interests, preferences and motivations. Possibly even hope. Which is strategic, in winning hearts, if not minds. I wish rhetoricians good luck. Thankfully, you and I will be way dead, when the shit hits the fan.

Thinking of a saying by Sidgwick who observed in his Methods of Ethics, “It may, however, be possible to prove that some ethical beliefs have been caused in such a way as to make it probable that they are wholly or partially erroneous: and it will hereafter be important to consider how far any Ethical intuitions, which we find ourselves disposed to accept as valid, are open to attack on such psychogonical grounds.” and an immediate response to your espoused comment comes to mind which involves decision making. Each decision involves a right outcome and a wrong one. Right and wrong. Good and bad. A choice is made and ethical/moral thought arises. Decision making is not dead.

A difficulty I constantly see in this subject area is that many mix individual with social group and species level matters. (Defining the differences between those is itself a major conundrum.) Take the comment by Mbele in this blog article. They mentioned mary and joseph; this could have been interpreted as merely some comment regarding religious resistance, but equally, and because of the lower case and use of language, mary and joseph were possibly being presented in the understanding as religious relics and could have been meant as a reference to religious reliquaries (e.g. the many chapels of bones, or bones of saints, with the chapels exhibiting their own possibly far more deeply rooted symbolism). A re-iteration of that concept by the use of a different religion’s relics (gold-leaf body of a holy person who apparently mummified (preserved) their own body when alive) sought to clarify and open a potential for further discussion on that particular perspective, but not the other potentialities.

If one particular area’s keywords are used (e.g. common sense), then the potential for a variety of understandings arises across individuals and social groups. Look to the example: moral common sense, or species-level morality (as defined by Sidgwick and others, and used by Crisp on this blog), also allows for the application of that term within different social groups where their interpretation may arise out of a different context. A differentiation between individual, culture, race, or species becomes important in my research area and is mostly accommodated here by the application of ‘social group’ to all social divisions or groups of individuals, because that then promotes the conscious application of more generic considerations as a means of achieving potential understanding(s) and comprehension before any possible application to any particular part of humanity.

A view of the multiplicity or variability of ethics/morality surely does not mean either is dead or has no meaning. Rather the opposite: it reveals, as in the museum mummification case, that it remains an active area where individuals and social groups are working together towards a fuller, broader understanding. The further example of the Down’s Syndrome court case and limited discussions there, when additionally also considered from the species DNA perspective, and the espoused theory that DNA appears to store certain genes containing traits for potential use in the future (which in that case could be argued were for stronger empathy and emotional sensitivity), reveals similar generic issues being considered. At that species type (moral common sense) of level, for me, they do not appear as rules or laws, but rather arise out of real physical and environmental causes which create and allow them to be noticed or imagined, necessitating understanding by the mind but not necessarily fully always based within humanity itself (this does not reflect any interpreted perception of what can at times be called god). In my experience an early engagement at the emotional level more often results in an immediate reflection by the other of a reflectively formed glass ceiling more than a generic feeling of right or wrong. My own use of the terms ethical/moral is a deliberate mental mechanism at the moment, because if you call them duty, rules, laws, constraints or guidance, those immediately form a conceptual base which, like many religions, frequently determines what follows. That dual use emphasises the need to reflect back into the individual’s beliefs and lived environment when dealing with this area. The emotional elements are important but, because of historical baggage, themselves become cumbersome during many of these circumstances until intelligently deployed after/during achieving some level of comprehension.

If those observational examples regarding the ongoing debates were not indicative of living ethics/morality, the museum scientific minds would merely continue with the use of the terms object and exhibit, seeing no need to alter that strictly scientific mode of thought, the Down’s Syndrome situation would continue in its historical form, and existing emotional attachments could justify each. But such alterations and questioning of progress do appear in the understanding of both individuals and social groups, revealing living systems, which do however tend to become moribund within certain areas, social settings or social groups where a preference arises for familiar answers or an immediate emotional response becomes deployed. So it is my opinion that ethical/moral thought, by those examples, is not dead. Just as I myself have no wish in any way to be.

N.B. Many writers go to great lengths in defining their use of words or concepts, and many readers will have become frustrated when following the dialogue in those books containing long and frequent footnotes providing definitions. To achieve some clarity, I prefer to attempt to write clearly about a complex subject without, or with minimal use of, socially situated concept keywords, often using examples instead, even if that becomes tediously long. Other preferences exist. (Please note the use of attempt in that sentence.) For those who count: 900+.

Self Inflicted

I used to think that time would heal old wounds—that obsolescent forms of social organization would be replaced by more harmonious, more evolved, ones. However, this is not what has happened.

I referred to political correctness as suggestive of how humans create and preserve prior social structures. Parents use guilt and fear to force their children to conform to their generation’s social structures and norms. Not all do this, but more than enough to keep primitive forms alive and well—too well to change.

Emotions get attached to early memories. Those memories of upbringing are powerful and hidden. The consequences are twofold. Social change is blocked. A generalized change-avoidance response is inevitable. Change terrifies the programmed. One philosopher (Jean Gebser) thought that human development is an additive process that preserves all prior social forms, i.e., we embody all the cultural forms that our species has ever used or lived through. Whether we call the process maturation or adaptation there seems to be a point when human societies stop learning and adapting to new social realities.

Humanity is locked down. We got this way after evolutionary fitness attained the tribal stage/form. Parental control tactics exhibited during tribalism are still effective at preserving the tribal status quo today. Concepts like morality, social evolution, adaptation, learning, and maturation have long been subverted by the urge to avoid change and bolstered by strong negative emotions implanted during upbringing—what BF Skinner termed “negative reinforcement”.

The Practical Ethics blogger Ian says, “This illustration of moral progress (my response to the article) and how it may occur hinges upon the inquisitive nature and thoughtful reflection about others.”

That sounds like a recipe or prescription. To which I offer… If wishes were fishes, we might have abandoned tribalism by now. However, we failed to dismantle the walls of outdated cultural and systemic ignorance. Put another way, we are broke, and out of options. This is the point where some thinkers tout the importance of being optimistic. That is delusional.

Another recent tactic is to shame the general population and/or their leadership. Shame is another old tribal control trick akin to fear and guilt. Fighting fire with fire adds more kindling, i.e., maladaptive programming that paints us into the corner yet again.

Ian again: A quotation by Hegel reveals a similar type of social issue. Take the famous adjuration of Plato to the stars in the lines:

When thou gazest forth at the stars, my star,

Would that I were the heavens and thence on thee

Could gaze forth out of a thousand eyes.

Now it is billions of eyes, and they are blinkered. They far outnumber the unblinkered eyes that would value cooperation over competition and adaptation over tradition. The blinkered cannot inquire and reflect on anything outside of the corner they have painted themselves into. They/we will not give up the way we are—not even if we wanted to. This form of blindness is self-inflicted at the species level.

“They [the blinkered] far outnumber the unblinkered eyes that would value cooperation over competition and adaptation over tradition.”

Yes, blinkered approaches can exist, but in many cases/occasions could that be traced back to preferred responses to difficult/unusual situations when placing reliance upon the known and familiar ground? Is that wrong? Because they exist, those more firmly formed mindsets cannot be wrong, just contextually temporary, or different. Focused approaches exist which consider a return on ideas can only be gained, or a measure applied, by a form of representational currency (a sort of promote and protect), others that the free flow of ideas should not be compromised in any way (a sort of project and protect). These types of approach do compromise and control progress, but are they not also part of social life?

Using the presented argument thread, the Egyptian Pharaohs’, cannibalistic, or slave (accepting threads of current thoughts on slavery as a free use of the intellectual thought forced out of the individual by others) cultures would continue to exist, as no change could happen. Goodness, even communications mechanisms (gesture/body language/image/art/audible/written) would not have developed or continued to change. Social cultural changes were said to either happen suddenly (often with great turmoil) or slowly over time. But it would be very unusual if those two routes to change were totally isolated and did not mix with and be affected by each other and others. That reflects previous intimations that the interaction between individuals promotes/creates ideas more readily (something that could be disputed by looking to the measuring mechanisms), but conceptually appears equally applicable to social life.

Surely complexity has to be incorporated within any generic thought about these areas, rather than denying the validity of that complexity and ending up with a moribund theory lacking any wider vitality, and then fitting all difference/dissent into a labelled group called blinkered or similar, rather than attempting to understand.

Those in a hurry do express valid views which may be different, and often look to swifter approaches to achieve their own objectives, sometimes as a means of avoiding any broader scrutiny, often because scrutiny by others dilutes the cause, and because, from a rushed perspective, it probably involves engaging with people who do not immediately understand. Why do they not comprehend? Could it be that what is often perceived as new or old is merely a reflection of known material which is not sufficiently generic to accommodate the broader audience/environment, or do egoistic politics and self-interest always arise?

Having observed all that, there could be some agreement that moving large social groups of individuals relies upon the type of blinkering explained in the ideas you present, something that becomes promoted by the many people and sectors who wish to achieve such social movement. And great determination is required of individuals who do have different or new ideas if those are to be progressed to some form of comprehension, because distractions will arise and there will be resistance.

Only if a communication becomes broadly understood in the space of ideas does sometimes a semblance of what could be perceived as generically focused discussion appear. Otherwise that boring form of self-interest/group interest which is mentioned above becomes perceived as taking control of the volume. (Volume (also as in attention grabbing) used roundly, reflectively, and with humour.) Thank you for the discussion, and the Blog article author for his patience, which I hope was rewarded in some way.

Psychologists call this “cognitive rigidity”.

Very good. Not having studied psychology (I had one course in school), my term for this would be cognitive dissonance. The adjectives, while dissimilar in meaning, convey a disconnect, it seems to me. Our fascination with interdisciplinarianism necessitates an ever-expanding arsenal of terms. Command of that arsenal is getting more and more problematic. Complexity is passively relentless.

Cognitive rigidity seems to lend itself to Dennett’s discussion of Zombies, a term most of us know, and Zimboes, one he made up not that long ago. He turned that over several different ways. Notice that the words contain the same letters, with only one vowel transposition. The professor made this up. Or did he? Did he only put it in our faces to see if someone might ‘get’ it? He attracts controversy because he jumps out of the system, challenges conventional thinking about things, and writes in a way that people find accessible, insofar as the modern word-salad of philosophy is not his style. I do not participate or comment in these blogs with any intention of ‘making a difference that makes a difference’. That was twentieth century idealism, as much as other twentieth century idealisms. It is disturbing to have lived across centuries. I have already addressed that conundrum elsewhere. If anyone here thinks this is a competition, that is wrongheaded on its face.

Firstly let me apologize as the following may make people queasy.

Q: Is a person still a person after they are dead?

There are various answers, some of which, such as “worm food”, whilst describing a natural process of decay, are not something most want to think of when remembering a person.

That is, there is a temporal cognitive dissociation in place.

Now consider the meat fridges in your supermarket,

Q: Do you see anything that looks like the animal or even parts of it?

Apart from chickens that are presented upside down and the occasional full leg of lamb, probably not.

When I was a little under six years old I helped in the slaughter, cleaning, butchering, and sausage and pudding making involved in turning a pig that had a name, and that I’d helped feed and got to know, into food. Like most small children, I was not at all perturbed by this; I quite happily ate the meat and the following year got to know the next pig.

I learnt to dissociate the live animal, with its obvious personality, from the meat on my plate.

More than half a century later this sounds utterly barbaric to most in society. Yet for my generation and generations going back thousands of years prior to that it was a societal norm in just about every household in one way or another.

What concerns me is not that we are losing traditions, but that we are losing the ability to live within a natural environment.

As part of my upbringing by what were, for the time, upper middle class parents with a good income, who had fought through WWII, I learnt how to make nearly all seasonal foods “shelf stable”, such that fridges, freezers and other horrendously energy-guzzling technology were not required.

I could say society has become soft or make some similarly daft comment, but the reality is that technology is making us increasingly less self-reliant: very dependent, and thus way too easy to control, just like a herd of cattle.

But it’s more than just a loss of skills; we are also losing our mental defences. Thus the likes of “Political Correctness” and “Virtue signalling” become the new religion to which we are required to genuflect or be cast out for heresy, by those who, let’s be honest, would have been laughed at or ridiculed unmercifully just a few generations ago.

That is, we are willingly giving power and control over us to people who are largely unfit to have it.

If you trace it back you will find a strong correlation to the increasing use of technology for petty things.

Thus we are increasingly prone to all manner of “fake”, not just “fake news”.

Some may know of ChatGPT and what a monstrosity it is. What was Facebook, and now calls itself Meta, has developed a version for personal use[1] called LLaMA-13B.

Thus my first question above is going to become obsolete, and so should be:

Q: Is a person still a person after an AI can reliably impersonate them?

Especially when you also know AI can reproduce your voice sufficiently well[2] for your bank account to get cleaned out.

Oh, and do not forget that some in the Mega-Corps of Silicon Valley, of which Meta is just one, are chasing life eternal. Some, we are told, are effectively vampires, draining blood from those still in their teens to be transfused into billionaires’ veins. Such people would willingly spend billions if they could still exist even in virtual form…

[1] https://arstechnica.com/information-technology/2023/02/chatgpt-on-your-pc-meta-unveils-new-ai-model-that-can-run-on-a-single-gpu/

[2] https://www.vice.com/en/article/dy7axa/how-i-broke-into-a-bank-account-with-an-ai-generated-voice

Outstanding, CR. (I do not use the word awesome in polite conversation now. It is just another example of exaggeration, excess and extremism.) Your assessment is pure pragmatism. James and Rorty would smile. More useful is better than less.
