This essay, by Oxford undergraduate student Dillon Bowen, is one of the two finalists in the undergraduate category of the inaugural Oxford Uehiro Prize in Practical Ethics. Dillon will be presenting this paper, along with three other finalists, on the 12th March at the final.

The Economics of Morality: By Dillon Bowen

The Problem

People perform acts of altruism every day. When I talk about ‘altruism’, I’m not talking about acts of kindness towards family, friends, or community members. The sort of altruism I’m interested in involves some personal sacrifice for the sake of people you will probably never meet or know. This could be anything from holding the door for a stranger to donating a substantial portion of your personal wealth to charity. The problem is that, while altruism is aimed at increasing the well-being of others, it is not aimed at maximizing the well-being of others. This lack of direction turns us into ineffective altruists, whose generosity is at the whim of our moral biases, and whose kindness ends up giving less help to fewer people. I propose that we need to learn to think of altruism economically – as an investment in human well-being. Adopting this mentality will turn us into effective altruists, whose kindness does not merely increase human happiness, but increases human happiness as much as possible.

In the first section, I explain one morally unimportant factor that profoundly influences our altruistic behavior, both in the lab and in the real world. In the next section, I look at decision-making processes related to economics. Like altruistic decision-making, economic decision-making is burdened by biases. Yet unlike in the altruistic case, when it comes to resource management we have largely learned to overcome our biases. Continuing this analogy in section three, I express the hope that we can overcome our moral myopia by thinking about altruism much as we think about economics.

1) Biases in Altruistic Decisions

When I claim that altruistic decisions are biased, I don’t simply mean that moral judgments rely on factors other than maximizing happiness. Rather, they rely on factors other than maximizing happiness that are, on even minimal reflection, obviously morally unimportant. Let me give you an illustration:

“Imagine you’re on vacation at a cottage on top of a mountain overlooking a village. One day a typhoon hits, destroying the village and leaving hundreds of people without food, water, or shelter. The destruction has made it impossible for you to travel down the mountain, but you can still help by making an online donation to the disaster relief organization working to assist them.

Do you have an obligation to help?”

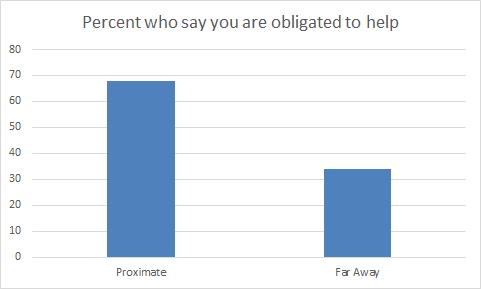

Jay Musen gave something like this script to half of a Harvard psychology class. To the other half he gave a similar script, except that instead of witnessing the carnage from the top of a nearby mountain, students were told to imagine themselves at home, watching the destruction unfold on television. In both scenarios, subjects have an equal ability to help the unfortunate villagers. The only difference is that in the former scenario the suffering you witness is up close and personal, whereas in the latter it is far away. And yet 68% of subjects said that you have an obligation to help in the first case, compared with 34% in the second. This study demonstrates an important cognitive bias: people who are physically proximate appear more morally important[1].

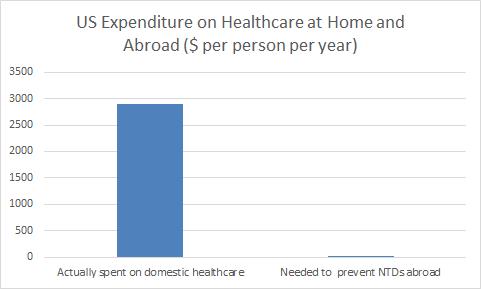

The physical proximity bias is borne out in real-world moral decisions. In 2011, the US government spent over $900 billion domestically on healthcare (~$3,000 per capita)[2]. Compare this with the cost of preventative treatment for schistosomiasis and other neglected tropical diseases (NTDs). Prophylactic pills can protect people in the developing world from a variety of parasites for just $0.54 per person per year[3]. With 400 million annual treatments, NTDs could be virtually eliminated[4]. Yet by 2016, charities working on schistosomiasis prevention will have the resources to deliver only a quarter of the necessary treatments[5]. Simply put, the US has approved spending $3,000 per person per year on healthcare for those at home, but has yet to approve spending even $0.54 per person per year on healthcare for those far away.
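The comparison in the paragraph above rests on the arithmetic in footnotes [2] to [4]. Below is a minimal Python sketch that redoes it using only the figures cited there. The constant and function names are illustrative, and treating the 400 million annual treatments as 400 million people at $0.54 each is an assumption made for the sketch, not a figure from the sources.

```python
# Figures from the essay and footnotes [2]-[4]; names are illustrative only.
TOTAL_US_HEALTH_SPENDING_2011 = 2.3e12   # USD, all payers
MEDICARE_SHARE = 0.229
MEDICAID_SHARE = 0.164
US_POPULATION_2011 = 311e6

NTD_COST_PER_PERSON_YEAR = 0.54          # USD per person per year
ANNUAL_TREATMENTS_NEEDED = 400e6


def government_health_spending() -> float:
    """Medicare plus Medicaid share of 2011 US health spending, in USD."""
    return TOTAL_US_HEALTH_SPENDING_2011 * (MEDICARE_SHARE + MEDICAID_SHARE)


def per_capita(total: float, population: float) -> float:
    """Spread a total evenly over a population."""
    return total / population


def ntd_annual_cost() -> float:
    """Annual NTD cost, ASSUMING one $0.54 treatment per person per year."""
    return ANNUAL_TREATMENTS_NEEDED * NTD_COST_PER_PERSON_YEAR


if __name__ == "__main__":
    gov = government_health_spending()
    us_pc = per_capita(gov, US_POPULATION_2011)
    print(f"Government health spending: ${gov / 1e9:,.1f} billion")
    print(f"Per capita: ${us_pc:,.0f}")
    print(f"Ratio to NTD cost per person: {us_pc / NTD_COST_PER_PERSON_YEAR:,.0f}x")
    print(f"Annual cost of 400 million treatments: ${ntd_annual_cost() / 1e6:,.0f} million")
```

With these inputs the sketch gives roughly $904 billion in government spending and roughly $2,900 per person, consistent with the "~$3,000" in the text, against $0.54 per person for NTD treatment. Under the one-treatment-per-person assumption, the full 400 million treatments would cost about $216 million a year.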

Clearly, physical proximity matters. But should physical proximity matter? I suggest that the physical proximity bias really is a bias, in the sense that geographic displacement is not the sort of thing we think we should care about when we give it a moment’s thought. Imagine a fundraiser asks you for a donation to, say, feed starving children. It would be quite odd, to put it mildly, if you responded with, ‘Well, that depends. How far away from me are these children you’ll be feeding?’

The physical proximity bias is just one of many cognitive biases that skew our moral decisions away from maximizing happiness based on obviously unimportant factors. However, we don’t see these biases as hindering the goal of our altruistic behavior, largely because we don’t see the goal of our altruistic behavior as maximizing well-being. In what follows, I draw an analogy between our moral decisions and our financial decisions. Both are plagued by myopic cognitive biases. But importantly, we explicitly evaluate our financial decisions in terms of monetary yield. This goal allows us to identify features of our cognitive systems which prevent us from making fiscally prudent choices, and find ways to overcome them.

2) Overcoming Economic Biases

When people invest in the stock market, their primary goal is to make as much money as possible. We know this because a massive industry has grown to ensure that stock investments yield optimal returns, stocks which yield poor returns quickly die out, and investors explicitly consider it their fiduciary duty to maximize profit for shareholders. When people give money to charity, or formulate opinions on how their tax dollars ought to be spent, their primary goal is not to help as many people as possible. We know this because only a tiny fraction of donors have even heard of organizations like GiveWell, ineffective charities thrive alongside charities which are more effective by orders of magnitude, and people rarely seem to consider the size of the impact of their philanthropy.

There are many biases which hinder our efforts to make optimal economic decisions, such as the gambler’s fallacy or loss aversion. And when it comes to our fiscal health, we recognize that these biases are bad. We understand that, for example, having a successful fund for your child’s college education depends on overcoming psychological mechanisms favoring impulsive spending. As we’ve seen, there are also manifold biases that prevent us from making altruistic decisions effectively. But when it comes to our moral decisions, people often fail to see these biases as in any way problematic. Most importantly, people fail to appreciate that the fate of billions of people in the world today – and potentially the entire future of humanity – depends on us finding a way to overcome these biases.

As humans, we tend to devalue rewards and punishments according to how far in the future they will occur. This bias is known as ‘temporal discounting’[6]. For instance, people will often prefer a smaller, more immediate sum of cash to a larger sum they will receive at some point in the future. This is an obvious impediment to certain financial goals, like saving for retirement. Yet, in spite of temporal discounting, many people somehow succeed in long-term financial planning.
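One common way to model temporal discounting is the hyperbolic function V = A / (1 + kD), where A is the amount, D the delay, and k a fitted discount rate; this form is widely used in delay-discounting research. The sketch below is illustrative only: the rate k = 0.05 per day is a hypothetical value, not an estimate from the studies cited. It shows the characteristic preference reversal, in which the same choice between $100 and $110 flips when both options are pushed a year into the future.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float) -> float:
    """Subjective present value of `amount` received after `delay_days`.

    Uses the hyperbolic form V = A / (1 + k * D). The rate k here is an
    illustrative parameter, not an estimate from any particular study.
    """
    if delay_days < 0:
        raise ValueError("delay must be non-negative")
    return amount / (1.0 + k * delay_days)


def prefers_sooner(small: float, t_small: float,
                   large: float, t_large: float, k: float) -> bool:
    """True if the smaller, sooner reward is valued above the larger, later one."""
    return hyperbolic_value(small, t_small, k) > hyperbolic_value(large, t_large, k)


if __name__ == "__main__":
    K = 0.05  # hypothetical per-day discount rate
    # $100 today versus $110 in 30 days
    print(prefers_sooner(100, 0, 110, 30, K))      # True
    # The same choice, pushed a year into the future
    print(prefers_sooner(100, 365, 110, 395, K))   # False
```

The same 30-day wait that seems intolerable today looks trivial from a year away, which is why the intuitive preference for immediate reward is a bias rather than a stable valuation.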

Perhaps people who are fiscally prudent are immune to temporal discounting? But no, this hypothesis is not borne out by the psychological literature. Rather, the conflict between immediate and delayed gratification involves two largely independent cognitive processes[7]. One – the fast, emotionally charged process – generates a strong visceral desire for immediate reward. The other – the slower, emotionally cooler process – responds to abstract reasons. Things such as saving for retirement require our slower, reasoning processes to supersede our faster, intuitive processes.

In the final section, I suggest that we should take these lessons from economics and apply them to morality.

3) Overcoming Moral Biases

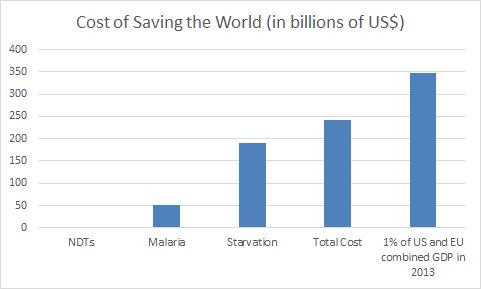

Global rates of starvation could be drastically reduced over the next decade for $190 billion[8]. Malaria could be virtually eradicated in the next 10 years for $51 billion[9]. Most of Africa could be freed from the threat of common tropical parasites within the next 7 years for $1.4 billion[10]. The US and EU together could pay the combined cost of these projects in a single year for less than 1% of their combined GDP[11].
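The claim that the three projects together cost less than 1% of one year's combined US and EU GDP can be checked directly from the figures in footnote [11]. A short Python sketch follows; the dictionary keys are illustrative labels, and all amounts are in millions of US dollars as in the footnote.

```python
# Figures in millions of USD, from the essay and footnote [11].
PROJECT_COSTS = {
    "famine relief (10 years)": 190_000,
    "malaria eradication (10 years)": 51_000,
    "tropical parasites in Africa (7 years)": 1_400,
}
GDP_2013 = {"US": 16_768_100, "EU": 17_958_073}


def total_cost(costs: dict[str, int]) -> int:
    """Sum of all project costs."""
    return sum(costs.values())


def share_of_gdp(cost: float, gdps: dict[str, int]) -> float:
    """Cost as a fraction of the combined GDP of the listed economies."""
    return cost / sum(gdps.values())


if __name__ == "__main__":
    cost = total_cost(PROJECT_COSTS)
    print(f"Total cost: ${cost:,} million")
    print(f"Share of combined 2013 GDP: {share_of_gdp(cost, GDP_2013):.3%}")
```

It reports a total of $242,400 million and a share of about 0.698%, matching the footnote. Because the project costs are spread over 7 to 10 years, paying them all within one year is the most demanding reading of the comparison.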

Sadly, these projects will not receive the funds they need, largely due to the fact that the goal of altruism is not to maximize well-being. Failing to take this as the summum bonum of our philanthropy leads us to be ineffective altruists. We need to revolutionize how we think about acts of kindness. Instead of thinking emotionally, we need to think economically. Altruism should be explicitly viewed as an investment in the well-being of conscious creatures. And we should demand nothing less of ourselves than to see our investment yield maximum returns.
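Treating altruism as an investment suggests funding whatever does the most good per dollar first. Below is a minimal sketch of that idea as a greedy allocation, assuming constant returns up to a funding cap for each option. The `Charity` class, the charity names, the costs per outcome, and the caps are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Charity:
    name: str
    cost_per_outcome: float   # hypothetical USD per unit of benefit
    room_for_funding: float   # hypothetical USD the charity can absorb


def allocate(budget: float, charities: list[Charity]) -> dict[str, float]:
    """Greedily fund the most cost-effective charity first.

    Assumes constant returns up to each charity's funding cap; real
    marginal returns usually diminish, so this is a simplification.
    """
    allocation: dict[str, float] = {}
    for charity in sorted(charities, key=lambda c: c.cost_per_outcome):
        if budget <= 0:
            break
        amount = min(budget, charity.room_for_funding)
        allocation[charity.name] = amount
        budget -= amount
    return allocation


def total_outcomes(allocation: dict[str, float], charities: list[Charity]) -> float:
    """Units of benefit bought by an allocation."""
    by_name = {c.name: c for c in charities}
    return sum(amount / by_name[name].cost_per_outcome
               for name, amount in allocation.items())


if __name__ == "__main__":
    options = [
        Charity("A", cost_per_outcome=40.0, room_for_funding=1_000.0),
        Charity("B", cost_per_outcome=5.0, room_for_funding=60.0),
    ]
    plan = allocate(100.0, options)
    print(plan)                           # {'B': 60.0, 'A': 40.0}
    print(total_outcomes(plan, options))  # 13.0
```

With a $100 budget, the sketch sends $60 to the cheaper option until its cap is reached and the remaining $40 to the other, buying 13 units of benefit. Real cost-effectiveness estimates are uncertain and returns usually diminish, so this is only the skeleton of the reasoning.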

Adopting this mentality will turn us into effective altruists. At a minimum, effective altruism says that to the extent we care about helping others, we should try to help as many people as possible as much as possible. Once we adopt this perspective, many ostensibly innocuous intuitions reveal themselves as biases. Just as temporal discounting impedes our ability to care about the future of our finances, I predict similar psychological mechanisms will impede our ability to care about the future of humanity. Intuitively, future suffering just seems less morally important than current suffering. And so we have less emotional incentive to invest in things like sustainable energy, nuclear disarmament, the safe development of AI, and epidemiological preparedness for super-viruses.

As effective altruists, we recognize that temporally discounting the suffering and happiness of billions – perhaps trillions – of our descendants is a bad thing. This is where our reasoning processes will save us. Once we have acknowledged our biases, the next step is to overcome them.

Now, we might think that overcoming, for example, temporal discounting for the purposes of long-term financial planning is a matter of expanding our cognitive capacity to represent all the resources we will need after our working years. If only we could represent large sums of cash in the future as if they were immediately available, our problems would be solved. But this is not what the brain does. Reasoning processes are not like intuitive processes with more RAM. Instead, reasoning processes represent information more abstractly and symbolically.

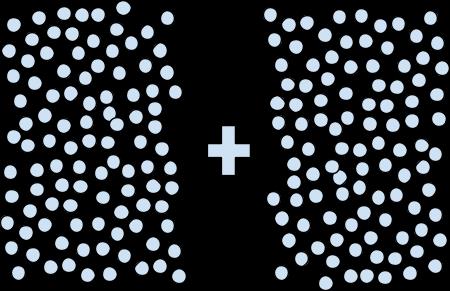

To illustrate this point, try adding these two groups of dots:

Unless you’re an autistic savant, you probably can’t do it without counting each one individually. This is because the brain has limited capacity to represent quantity concretely. We can hold only about seven objects in working memory at a time[12]. Now try the equivalent math problem where the dots are represented abstractly as Arabic numerals:

100 + 100

Easy.

Just as symbolic representations of quantity allow us to do math with numbers greater than 7, learning to represent suffering and happiness symbolically will allow us to better care about people who live beyond our borders and beyond our lifetimes. We are probably all familiar with the received wisdom that people ought to be treated as individuals, not statistics. I think this ‘wisdom’ is fundamentally wrong. Our brains simply lack the capacity to empathize with every individual in the world. If we are to properly care about everyone, we need to think of human well-being as a statistical measure of happiness and suffering.

We don’t need to be able to intuitively conceptualize large quantities of things in order to do math, and we don’t need to be able to empathize with the suffering of large quantities of people in order to care about them. Instead of understanding the pain of individuals, we should make an effort to understand abstractly the pain of the masses. This is the key to discovering and overcoming our moral biases. We need to recognize the immense amount of suffering in the world today, as well as the vast potential for happiness in the future, and take it as our mission to make the world as happy a place as it can possibly be.

Works Cited

Fenwick, Alan. “My Story.” Just Giving. Schistosomiasis Control Initiative, n.d. Web. 17 Jan. 2015. https://www.justgiving.com/sci

GiveWell. “Schistosomiasis Control Initiative (SCI).” GiveWell. Nov. 2014. Web. 17 Jan. 2015. http://www.givewell.org/international/top-charities/schistosomiasis-control-initiative

Green, L., Fry, A. F., & Myerson, J. (1994). Discounting of delayed rewards: A lifespan comparison. Psychological Science, 5, 33-36.

Madden, G. J., Begotka, A. M., Raiff, B. R., & Kastern, L. L. (2003). Delay discounting of real and hypothetical rewards. Experimental and Clinical Psychopharmacology, 11, 139-145.

McClure, S. M., Laibson, D. I., Loewenstein, G., & Cohen, J. D. (2004). Separate neural systems value immediate and delayed monetary rewards. Science, 306, 503-507.

Metcalfe, J., & Mischel, W. (1999). A hot/cool-system analysis of delay of gratification: Dynamics of willpower. Psychological Review, 106, 3-19.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81-97.

Musen, Jay. “Moral Psychology to Help Those in Need.” Thesis. Harvard University, 2010. Print.

National Center for Health Statistics. Health, United States, 2013: With Special Feature on Prescription Drugs. Hyattsville, MD. 2014. http://www.cdc.gov/nchs/data/hus/hus13.pdf

O’Brien, Stephen, comp. “Neglected Tropical Diseases.” (2008-2009): n. pag. World Health Organization. World Health Organization. Web. 17 Jan. 2015. http://www.who.int/neglected_diseases/diseases/NTD_Report_APPMG.pdf

Roll Back Malaria. Key Facts, Figures and Strategies: The Global Malaria Action Plan. Geneva: Roll Back Malaria Partnership, 2008. RBM. World Health Organization, 2008. Web. 17 Jan. 2015. http://www.rbm.who.int/gmap/GMAP_Advocacy-ENG-web.pdf

Schistosomiasis Control Initiative. “Schistosomiasis Control Initiative.” Imperial College London, 2010. Web. 20 Nov. 2014. https://workspace.imperial.ac.uk/schisto/Public/SCI2010.pdf

United States Census Bureau. “Annual Population Estimates.” State Totals: Vintage 2011. United States Census, n.d. Web. 19 Jan. 2015. http://www.census.gov/popest/data/state/totals/2011/

World Bank. “World Development Indicators.” Data: The World Bank. The World Bank, n.d. Web. 15 Jan. 2015. http://data.worldbank.org/indicator/NY.GDP.MKTP.CD

World Game Institute. “Strategy 1: Famine Relief, Fertilizer for Basic Food Production, and Sustainable Agriculture.” UNESCO, 1998-2001. Web. 17 Jan. 2015. http://www.unesco.org/education/tlsf/mods/theme_a/interact/www.worldgame.org/wwwproject/what01.shtml

[1] Musen 2010

[2] According to the National Center for Health Statistics, the US spent $2.3 trillion on healthcare in 2011 (2014, pp. 5-6). Of this, 22.9% was paid by Medicare and 16.4% by Medicaid, for a total of just over $900 billion. The US population that year was 311 million (United States Census Bureau ‘Annual Population Estimates’), giving roughly $2,900 per capita.

[3] Schistosomiasis Control Initiative 2010, p. 11

[4] Fenwick ‘My Story’

[5] GiveWell 2014

[6] Green et al. 1994; Madden et al. 2003

[7] Metcalfe & Mischel 1999; McClure et al. 2004

[8] World Game Institute 1998-2001

[9] Roll Back Malaria 2008, p. 13

[10] O’Brien 2008-2009, p. 11

[11] In 2013, the GDP of the US was $16,768,100 million, and the GDP of the EU was $17,958,073 million (World Bank ‘World Development Indicators’). The total cost of these three projects is $242,400 million, or 0.698% of their combined GDP in 2013.

[12] Miller 1956

The essay begs questions regarding proximity. For one thing, it assumes that physical proximity is doing the work in the different responses people give. I would guess that there are a bunch of different proximities that matter – historical, cultural, familial, emotional, linguistic, religious, political… – these might all be part of the observed variegated response. By treating physical proximity as the only one that matters, the essay ignores potentially stronger cases in favour of differentiation. [I agree that physical proximity on its own is of little value – I didn’t value my family any less when I lived on the other side of the world from them; and I can’t think of a reason why I should.]

Also, the essay simply presupposes that we all ought to value all individuals equally, irrespective of our relationship with them. I cannot imagine why a rational agent would do this. Some people are our friends; others are our enemies (they wish to do us harm). Imagine you are the mayor of Samarkand on the eve of Mongol conquest.* The Khan is going to assemble and murder everyone in your city, partly to experience the sensory pleasure of stacking all the severed heads in a big pyramid (a big victory for art-for-art’s-sake, I guess…). Would anyone seriously suggest that the mayor should place the same weight on the well-being of the Khan’s army as he places on his own people? I don’t see why I wouldn’t play favourites – giving higher weight to those with whom I share more in common. (I can see – in a few highly idiosyncratic situations – why some institutions might choose to weight all individuals equally: a global government or universal church might want to take that view, since it is (nominally) acting in the interests of all. But since I’m neither pope nor global dictator, this odd view is not one I have to embrace.)

*Or similar. There are lots of examples to choose from.

Hi Dave,

First, thanks for the comments.

Second, with regard to Jay Musen’s experiment, I do think physical proximity is the most plausible explanation of the data. Neither the physically proximate nor the physically distant manipulation mentioned anything about cultural, linguistic, emotional, political, or religious differences. So it would seem odd if subjects implicitly assumed the villagers were, say, more culturally similar to them in the physically proximate manipulation than in the physically distant one, and thereby assigned them more moral weight.

However, if what you mean is that physical proximity is probably not the only reason for the discrepancy in domestic vs. foreign US health care spending, I fully agree. I think there are lots of biases which factor into this data – I would predict tribalism and racial biases are also driving factors, perhaps more so than physical proximity. But the essay had a 2,000-word limit, and I didn’t consider a thorough investigation of the psychological causes behind US health care spending to be one of my crucial points.

Finally, I would note that whatever biases are driving this difference, the point of the anecdote was to provide an example of how people’s moral decisions are guided away from maximizing happiness on the basis of obviously morally unimportant factors. So yes, I think people are biased against those of other races, cultures, religions, political orientations, etc. too. But again, I didn’t mention these because I just needed one case study to illustrate my point, and the essay had a 2,000-word limit.

Third, I don’t mean to be advocating utilitarianism in the sense that we ought to value the well-being of all individuals equally irrespective of our relationship to them. I thought I made this extremely clear in the first three sentences, in which I say, “When I talk about ‘altruism’, I’m not talking about acts of kindness towards family, friends, or community members. The sort of altruism I’m interested in involves some personal sacrifice for the sake of people you will probably never meet or know.”

Basically, I’m arguing that to the extent we care about the well-being of individuals who have no direct impact on our lives (e.g. impoverished people in South America or Africa), we should try to improve the lives of as many people as possible by as much as possible. For example, imagine you want to donate $100 to, say, feed starving children in Africa. All else being equal, don’t you want to ensure that you donate to a charity which uses your money effectively to save as many children as possible? If charity A can use your money to save 2 lives, and charity B can use your money to save 20 lives, you ought to go with charity B. I’m making a much weaker and more palatable point than you take me to be.

Best,

-Dillon

Thanks for the reply, Dillon.

This reminds me a bit of debates over cosmopolitanism – there are strong versions, which are implausible in the same sort of Godwin-ish way that strong versions of EA are, and there are weak versions, which are potentially so anodyne as to be not much more than platitudes. The weak version of effective altruism strikes me as highly contingent: the first step is to couch the argument in terms of “IF you want to improve human welfare through altruism…”. The second step is to restrict the scope of altruism so it rules out the bulk of what most people would think of as the networks along which altruism might apply – family, community, etc. Strong versions ditch the contingency and make it sound as though the sole object of altruism ought to be the maximisation of (instantaneous*) human well-being. Strong versions also elide discussion of obligations to family and community; presumably because they know they won’t make much headway on that.

So yes – given where you eventually take your argument towards the end of your response – that’s fairly palatable. But that’s a long way from what you say in the essay: “these projects will not receive the funds they need, largely due to the fact that the goal of altruism is not to maximize well-being. Failing to take this as the summum bonum of our philanthropy leads us to be ineffective altruists. We need to revolutionize how we think about acts of kindness.”

There’s more than a whiff of motte and bailey about the effective altruist movement. When pressed, they retreat to fairly unassailable arguments about wanting to see more lives saved per dollar. But when not pressed, they advocate a sort of wholesale revolution in the way we think about other people. I find that a bit disingenuous (and I’m not alone – even people employed in the effective altruism cause have expressed disquiet about this propensity).

*I’ve heard very little from effective altruists about the issues that arise when one takes an intergenerational view – nothing about investment in girls’ schools or skills or contraception or Rawlsian inequality – so I feel fairly well justified in calling it instantaneous.

Thanks for your reply to my reply!

I fully agree that there are different ways of interpreting effective altruism, and I’m sorry if it seems I’m equivocating or using a motte-and-bailey disingenuously. The version of EA I endorse is somewhere between the weak interpretation I mean to argue for here and utilitarianism. Basically, I think that to the extent we care about the well-being of others (friends, family, community members in a separate category), we should try to improve the lives of as many people as possible by as much as possible (and when I say ‘people’, please think of this as a shorthand for ‘all creatures capable of experiencing happiness and suffering’). Further, I believe that, if people had greater introspective access to their own preferences, they would realize that they care more than they currently think about helping others. I’m not a utilitarian in the sense that I consider it unlikely that any amount of accurate introspection would reveal to us that we actually don’t value friends, family, and community members more than everyone else.

But in this essay, I only mean to advocate for the weakest form of EA. I’m sorry if this wasn’t sufficiently clear, but I meant to start the essay by clarifying that when I talk about ‘altruism’ in this context, I mean ‘acts of kindness towards people who you’ll never meet or know – friends, family, and community members in a different category’. So in the passage you quoted, try rethinking it using the definition of altruism I set forth at the beginning of this paper, and I think you’ll find my position is entirely consistent.

As for an intergenerational view of EA, in my experience most of our community values future people equally with existing people. I know Andreas Kappes and Guy Kahane (who work at the Uehiro Centre) are looking into how ordinary people weigh future vs. existing people. But I have yet to hear anyone in the EA community voice a preference for existing people. I don’t doubt that there are such people in the EA community, but I would bet they’re in the minority.

Best,

-Dillon

Hello Dillon

You write: “Instead of understanding the pain of individuals, we should make an effort to understand abstractly the pain of the masses”

I trust you don’t really mean “instead of”?

If you mean “as well as”, I still disagree with you, partly for the same reasons as Dave. But if you really mean what you wrote, I’m aghast. (Besides, it wouldn’t be a very original thought: Stalin, among others, could probably sue you for plagiarism and claim your prize should you win.)

I think the context of the statement makes it clear that I’m not saying anything like Stalin, and I find your comparison deeply uncharitable.

What I mean is that moral decisions should be guided more by abstract mental representations of the suffering of large quantities of conscious creatures, rather than concrete mental representations of the suffering of a small number of individuals. I’ve recently warmed up to the idea that intuition has an important role to play, and I consider that role motivational. We should be motivated by our concern for the well-being of individuals, but when it comes to making decisions about where we donate or how our governments ought to spend money on public goods, the crucial question we need to be asking is, ‘What can I do to alleviate as much suffering as possible?’.

Best,

-Dillon

Thanks for your reply Dillon,

I reserve the right to be uncharitable towards a view that we should *replace* understanding of the pain of individuals by an abstract understanding of the masses (family, friends or community members excepted).

And I am very happy that you make it clear that you don’t actually mean it.

You’ll no doubt consider me a terrible pedant, but I do believe that in philosophy one should be as precise with words as one can….

I don’t deny that you have a *right* to be uncharitable – I just think you exercised it poorly in this case. The statement was made in the context of psychology and mental representations – not some Stalinesque polemic against individual rights. If you thought my statement could have been better phrased, next time please just tell me that and give me a chance to clarify myself *before* you compare me to a genocidal maniac.

When you quote me out of context like that, I agree that I sound dangerously ambiguous. Clarity is something I continuously struggle with as a philosophy student, and I know I can do better. And in this case, perhaps I should have done better, and you should feel free to point that out.

But good philosophers strive not only to be precise in their own writing, but to charitably interpret the writings of others. You’ll no doubt consider me a terrible pedant, but I do believe that in philosophy one should make an effort to understand what an author means in context, even if his/her writing could be more precise in certain respects. So it seems we both have things to work on.

Dillon, great essay. Your logic flowed well and I enjoyed the thought you put into this. It allows us to reevaluate what we do with charitable contributions and how we can help out those in need in a more efficient manner. I hope that this is more than merely a philosophical topic; that this thinking will turn into actions taken by others as well as yourself.

I am a student at Clemson University in the US, graduating later this year, and I am looking to do something meaningful, to satisfy my desire to help and to provide what I can to those who need it. I am unsure where life will take me, but I hope to find something worth pursuing. I bring this up to encourage you as well, since you seem to understand that there are others out there who are less fortunate and who, regardless of location, could use our time, money, attention, or whatever skills or assets we have to offer. Also, I am studying Accounting and do not really plan on using it if I take this path, so this is also a reminder that where you build knowledge should not restrict what you do with your life. Rather, take what you learn and apply it toward the betterment of your life and the lives of others.

All the best,

Pete

Love the article. You did a great job drawing together different situations to create a full understanding of the picture you were painting.

Building on your point about the proximity bias, I believe the proximity bias stems from a bias humans have toward people who are similar to themselves. If you look at racism, nationalism, and sexism, they are based on the thought that one group of individuals is noticeably different from another. Therefore, individuals who believe these group differences matter don’t find it worthwhile to help someone from the other group. For them, helping the group they identify with, regardless of actual effectiveness, is more important. Moreover, helping the human race as a whole isn’t the main goal in an altruistic situation for these individuals. Going back to your story, the individual at the top of the mountain isn’t distraught like the villagers whose village has been destroyed, so he or she perceives a difference between the villagers and himself or herself. He or she does, however, find a similarity in the fact that the villagers live nearby, which creates a feeling of obligation. The people who were not at the top of the mountain find neither of these similarities, so they feel even less obligation. The only similarity we can identify without further information about the story is that both the individual and the villagers are Homo sapiens.

For the obligation discrepancy to be eliminated, people would have to share the same similarities on all levels.

The debate as presented (and argued within the comments) does not appear to make room for, or account for, the wishes of the charitable recipients. Applying strictly logical criteria (in the above case, financial rules) to morality presents a very real problem: imposing a solution that may be unwanted on people one does not know, as a means of salving our own sensitivities. When determining the value of aid, we may need to define what is most valuable to the recipients themselves. In an increasingly logically constructed world, this facet would seem to become more important every year. How would you see that element included?

