By Matthew L Baum

“A test could indicate whether people in their 40s are more likely to develop dementia later in life, scientists say. But wouldn’t many of us rather not know?” reads the picture caption from a recent BBC News Magazine article.

The article addresses a study from Brunel University led by Dr Bunce. The researchers show that small areas of damaged neural tissue called White Matter Hyperintensities (WMHs), which are present in dementia patients and associated with the severity of their cognitive impairment, are also present in some reasonably healthy people aged 44-48, where they are associated with poorer cognitive function. More specifically, middle-aged individuals with WMHs in their frontal lobes performed worse on an attention test (reaction time to press one of two buttons in response to a light signal indicating which), and those with WMHs in their temporal lobes performed worse on memory tests (picking out words or faces from a previously learned series). The correlation between these WMHs and cognitive function remains after controlling for a range of health indicators, from head trauma to vascular risk.

As the authors point out (and the BBC journalist seems to ignore), the study has several major shortcomings:

1. The study can make no claims about whether the WMHs cause the cognitive deficits, because it is cross-sectional and descriptive.

2. There are as yet no data on which of these participants will go on to develop dementia (or on whether those with WMHs are at increased risk).

3. The number of individuals found to have WMHs is quite small, even though this is the largest study of its kind.

At this point you may be thinking, like I was when I finished reading the original research article, “Ok. Now where’s the test?”

To say, as the journalist does, that the study “does not offer a cast-iron prediction that the patient will develop or be free from dementia” is at best an understatement. The cast is not yet made, the iron not yet mined or smelted, and the test and its predictions are still a matter of future technology. To draw an analogy, imagine that afternoon rain is associated with the number of clouds in the afternoon sky, and that we have now found that some skies already have a few clouds in the morning. We cannot yet tell whether skies with a handful of morning clouds will go on to gather more and more clouds and then rain in the afternoon. It is just as plausible that these morning clouds will dissipate or stay the same, or that the clouds responsible for afternoon rain form only in the afternoon. Likewise, the WMHs in 40-somethings’ brains might stay constant or disappear, and these people may never develop dementia; it is also possible that both the WMHs and the dementia seen in 70-somethings arise together later in life, independently of whether WMHs were present at 40.

But media exaggeration of scientific findings is a topic for another day.

This study should be applauded as excellent work and an important stepping stone in crossing the river towards a predictive test.

Professor Bunce very articulately outlines the study’s strengths:

“First, the study is one of the first to show that lesions in areas of the brain that deteriorate in dementia are present in some adults aged in their 40s.

“Second, although the presence of the lesions was confirmed through MRI scans, we were able to predict those persons who had them through very simple to administer measures of attention tests.”

There are reasonable data suggesting that further research may make it possible to predict dementia. For example, poor performance on one of the attention tests that this study associated with WMHs has some predictive value for who will develop mild cognitive impairment, which in turn sometimes precedes full dementia.

What is really required is for Bunce et al. to follow this cohort over time to see whether the 40-somethings with WMHs are more likely to develop dementia, a study this group is currently conducting.

So although this study does not yet support a test with predictive validity for dementia, it is likely that future research will do so in the near future. If such a test becomes available, would you want to get tested? Answer this question in your head, then read on.

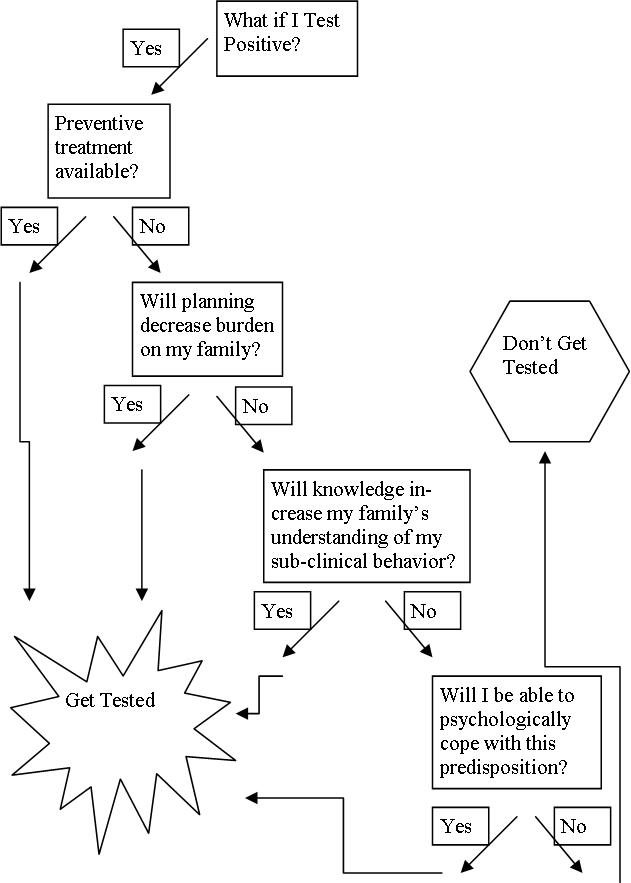

The most interesting part of the BBC article is its comments section, in which readers answer just that question. Differences of opinion seem to depend on the perceived ability to:

1. Prevent or delay dementia:

a. “if I can change something to stop or slow it I would.”

2. Reduce the burden on family:

a. Planning: “I’d want to know and make what plans I can whilst I still have the mental faculties available to myself to do so.”

b. Explaining subclinical behavior: “His behaviour prior to our divorce put such a strain on our marriage […] Had I known, however difficult, I would have stayed with him to look after him.”

3. Cope with prediction worry:

a. “I don’t know how I would cope knowing what would potentially happen to me.”

I have created a rough decision tree below to visualize a possible decision-making process based on these principles. A “no” means that the answer to the question is no, that the person does not consider it important, or that the person does not take the principle into account in her decision. The one principle I have left out for clarity is discrimination (whether insurers or employers would have access to the test results and be able to discriminate against you because of them). For now, let us assume that policies are in place to prevent discrimination and that the test is voluntary.

So how about now? Would you get tested? Are there any other factors that would matter?

So far we have been talking about a personal decision. According to the Alzheimer’s Society, however, dementia in the UK afflicts 750,000 people (expected to be over a million by 2025), kills 60,000 per year, and costs £20 billion per year. Moreover, it is estimated that “delaying the onset of dementia by 5 years would reduce deaths directly attributable to dementia by 30,000 a year.”

The NHS would then have a significant financial and moral reason (assuming the five years gained before onset were of good quality) to mandate screening and preventive treatment. If treatment that would prevent or delay onset were available, support for planning and coping were given, and education were provided to care-givers, would these reasons justify coercive population-wide screening and intervention once a test supported by sound evidence becomes available?

Thank you for this interesting post.

To answer your two questions:

1. Yes, I would take the test, provided that the risk of error were shown to be very small, and I would do so for reason 2 raised in the BBC comments.

On the other hand, nothing that you write, or that I have seen elsewhere, suggests that we are at present anywhere near preventing or delaying the onset of dementia. This means that I reject reason 1 as grounds for a test.

2. No, I do not believe that society has a right to mandate screening.

A. On grounds of freedom of choice.

B. On the grounds that, although this freedom could in rare cases be outweighed by a threat to others’ health (for example, compulsory vaccination against smallpox), I cannot see that dementia falls into this category.

I would hold this position even if it were clearly established that treatment that would prevent or delay onset were available, that support for planning and coping were given, and that education were provided to care-givers.
