By Julian Savulescu

Together with Tom Douglas and Ingmar Persson, I launched the field of moral bioenhancement. I have often been asked ‘When should moral bioenhancement be mandatory?’ I have often been told that it won’t be effective if it is not mandatory.

I have defended the possibility that it could be mandatory. In a paper with Ingmar Persson, I discussed the conditions under which mandatory moral bioenhancement that removed “the freedom to fall” might be justified: a grave threat to humanity (existential threat) with a very circumscribed limitation of freedom (namely the freedom to kill large numbers of innocent people), but with freedom retained in all other spheres. That is, large benefit for a small cost.

Elsewhere I have described this as an “easy rescue”, and have argued that some level of coercion can be used to enforce a duty of easy rescue in both individual and collective action problems.

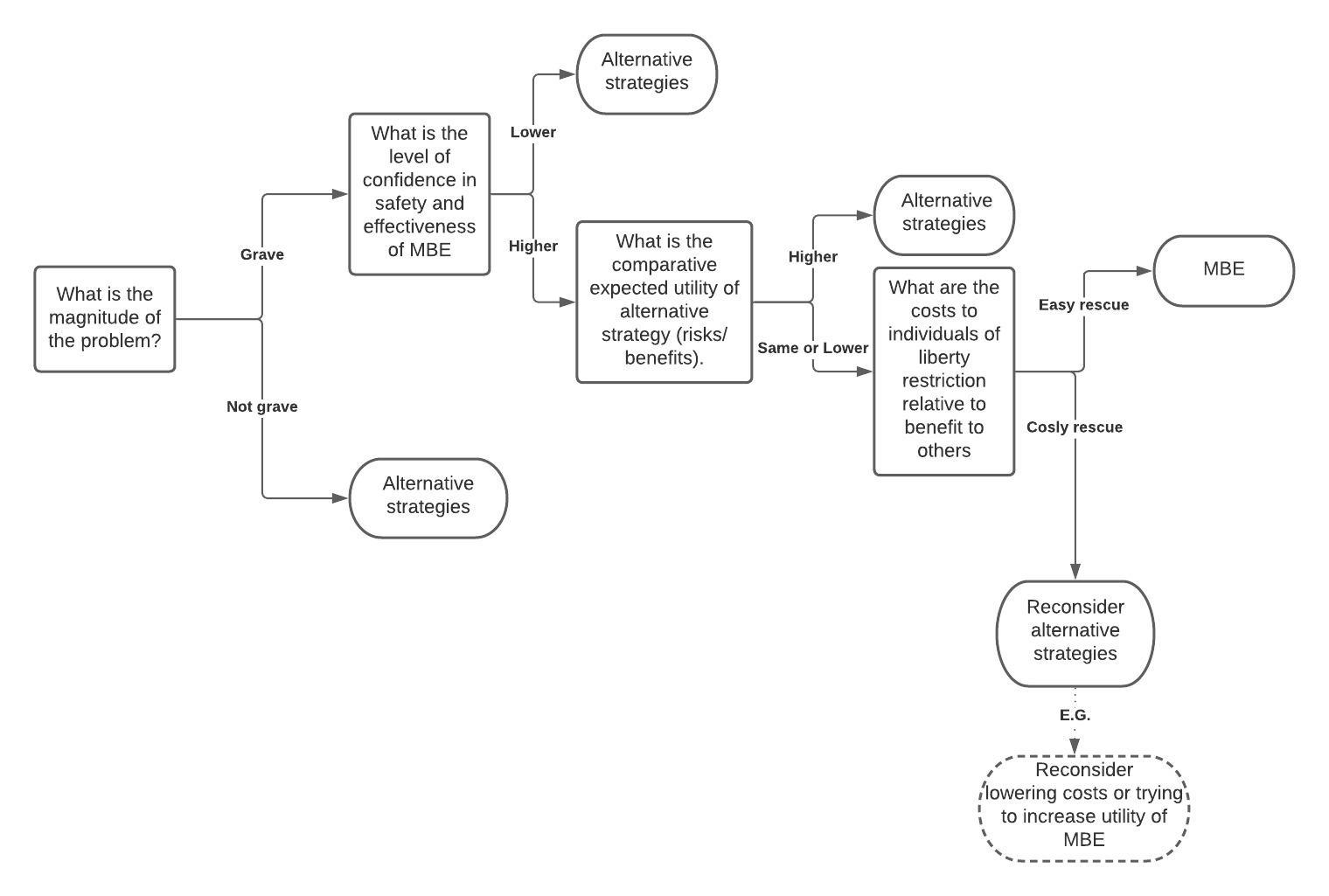

The following algorithm captures these features, making explicit the relevant factors:

Note that this applies to all moral enhancement, not only moral bioenhancement. It applies to any intervention that exacts a cost on individuals for the benefit of others. That is, when can the autonomy or well-being of one be compromised for the autonomy or well-being of others? This algorithm creates a decision procedure to answer that question.

Indeed, this algorithm was developed to answer the question, in the COVID pandemic: when should vaccination be mandatory?

But it applies to any social co-ordination problem where risks or harms must be imposed to achieve a social goal. Examples include public health measures (e.g. quarantine or vaccination), environmental policies (e.g. carbon taxes), taxation policies more generally, and others.

Can large harms (including, in extreme cases, death) ever be imposed on individuals to secure extremely large collective benefits (such as continued existence of humanity)? According to utilitarianism and many forms of consequentialism, they can be justified. But we need not answer this question to consider the moral justification for imposing small risks or harms for large collective benefits. We should all agree, whatever our religious or personal philosophical perspectives, that small risks or harms should be imposed for large social benefits (this is the second account below, the absolute threshold account). After all, that is what justifies mandatory seat belt laws, speed limits and taxation. And it could justify mandatory moral enhancement, such as moral education, and moral bioenhancement, should that ever be possible, if the risks or harms were equivalent and the benefits as great.

Proportionality: Relative or Absolute?

One way to think about “easy rescue” is to ask whether the proportionality of sacrifice to benefit should be relative or absolute. In a previous paper with Alberto Giubilini, Tom Douglas and Hannah Maslen, I discussed relative thresholds vs absolute thresholds. Peter Singer holds a relative threshold view, which stipulates that large individual costs are justified when the benefits for others are proportionately larger. On an absolute threshold account, there is an upper limit to the magnitude of the cost you can impose on individuals for the collective benefit, even if beyond that threshold the cost would be proportionate to the benefit. For example, on the relative account it would be permissible to impose death on an individual to save significantly more people, because it is proportionate. Or extreme effective altruists might argue that you should give, say, 70% of your income to save people in a poverty-stricken country. On the absolute threshold account, the individual cost is not justified if it is above a certain threshold (so, for example, we could set the threshold much lower than the famous “kill one to save many” examples, even if the cost is relatively proportionate, because death is too large a cost for an individual).
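The contrast between the two accounts can be made concrete with a small sketch. This is only an illustration under stated assumptions, not the algorithm from the figure above: the numeric scale for costs and benefits, the `min_ratio` proportionality factor, the `cost_cap` ceiling, and the example values are all hypothetical and chosen purely to show how the two tests can diverge.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intervention:
    """A policy that imposes a cost on an individual for a collective benefit.

    Both quantities are on a made-up common scale (assumption for illustration).
    """
    name: str
    individual_cost: float
    collective_benefit: float


def relative_permits(x: Intervention, min_ratio: float = 2.0) -> bool:
    """Relative threshold: permitted whenever the benefit is proportionately larger."""
    return x.collective_benefit >= min_ratio * x.individual_cost


def absolute_permits(x: Intervention, min_ratio: float = 2.0,
                     cost_cap: float = 10.0) -> bool:
    """Absolute threshold: proportionality is still required, but the
    individual cost must also stay under a fixed ceiling (hypothetical cost_cap)."""
    return x.individual_cost <= cost_cap and relative_permits(x, min_ratio)


# Hypothetical values: a small cost with a large benefit, and a very large
# cost that is nonetheless proportionate to an even larger benefit.
seat_belt = Intervention("mandatory seat belts", 1.0, 100.0)
kill_one = Intervention("kill one to save many", 1000.0, 5000.0)

for case in (seat_belt, kill_one):
    print(case.name, relative_permits(case), absolute_permits(case))
```

With these assumed numbers, both accounts permit the small-cost case, while only the relative account permits the very large individual cost; the absolute account rejects it because the cost exceeds the ceiling, however proportionate the benefit.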

Thanks to Alberto Giubilini for helpful comments

The basic idea of ‘moral bio-enhancement’ is distinctly misguided.

The way to increase moral structure is by scaling it up across larger numbers of people. And that is done with mechanisms and protocols, like the conventions and institutions of property, money, markets. Boosting empathetic instinct and such personal ‘moral enhancement’ cannot compete with that. This is an informational, computational problem, not a feeling problem.

The argument that we now live in much larger societies and our instincts no longer match up really makes just the opposite point to the one it intends to. It is the external artifice of social/regulatory machinery that has brought us here, and clearly *not* instinct.

What is the personal sentiment, the ‘disposition’, that would get food efficiently grown and distributed across national connections? There is not one. It is not a ‘disposition’ that does this, but a system of organisation. You do not solve the travelling salesperson problem, or distributed consensus problems by enhancing people’s feelings. You solve them at base with algorithmic techniques, and build them with social mechanisms/regulations/institutions/etc.

It cannot be an individual problem, because where would the cohesion come from? Enhancing individual moral judgment will only produce coherent structure if everyone’s local choices align in some way, and not a simple way. It is not a matter of people feeling inclined to coordinate with others across the world; it is a matter of *how* to do so: how to organise that coordination, or how to get mechanisms that self-organise it. That all seems to have been merely assumed, or rather missed, in the idea of ‘moral enhancement’, but it is the complexity of the coordination that is the very problem, not the inclination to it.

The question of large collective benefits suffers from ambiguity in its terms. How is the collective defined? This can lead to drastically different choices.

For example, the author claims that the continued existence of humanity is a large collective benefit. But from the perspective of currently existing people, this isn’t necessarily true. Suppose we had a technology that could give every person alive today a moderate benefit – say, their lifetime suffering is reduced by 20% and their lifetime happiness is increased by 20%, all else equal – but that came at the cost of destroying the world in 100 years. In this case, the largest collective benefit for the people alive today would be accomplished by using the technology and accepting that humanity won’t exist for more than 100 years.

The conflation of morality and biology leads to category errors that are, quite honestly, verging on the infinite. For Savulescu morality is essentially nothing more than a set of cultural beliefs enlivened by our biology, and as such has no basis outside of our own brains. Yet somehow he’s marshalling moral arguments to describe our own moral incapability and need for ‘bioenhancement’. Morality, for him, is permanently moving, but not forward, just in general, lurching around without being directed towards any final goal or aim. My speculation is that the reason the notion of bioenhancement has gained some prominence within medical ethics is that it coincides with the growth of the “sanitary state” (the successor of the security state).

Pedro’s speculative comment appears exclusive – in the sense that the main approach being documented in the article is one which clearly emanates from the caring sector, and is attempting to deal with experienced tensions. Yet to use such a freely but narrowly defined worldview from a smaller subset of social groups and project that as a mandatory requirement for a larger and broader social grouping, does lead to a narrowing set of ethical rules for society as a whole, which appears to partially be where Pedro’s criticism arises. If a more limited approach to the article is taken, for truly exceptional circumstances (a great deal worse than the current pandemic), provided more humanity is applied within the decision process or to outcomes, the cold logic makes more sense. It is almost as if Pedro is caught within the ‘is’ rather than viewing the article as presenting a different ‘as’.

Looked at more widely, the blog article’s type of documented circumstantial need for a reduction in views and mandatory individual sacrifice normally seems to appear together with the availability or perception of a greater level of, or tighter, control/influence over a larger social grouping, and hence could be understandable in that context. Less potential for direct control/influence should ordinarily result in differing attempts at adaptation to any felt tensions by the smaller group. And that appears to be at the crux of the blog article, because a more complete control of any social situation is being freely argued for, which would paradoxically leave individuals with less ability to themselves express truly ethical choices, but would serve to justify the proffered ethics.

Harrison’s comment fails to consider whether this could be where many social groups’ ethical frameworks often fail, in that they do not structure their own codes within the broader environment (or social grouping) in which they exist, and hence require confidentiality or secrecy mechanisms to be able to function in the way they wish. Many very costly corporate ethical failures would seem to indicate that type of scenario. To say that social groups (politics and/or commerce) are not made up of individuals who exercise their own innate morality/ethical code at some level is to fall into the same trap documented here.

Similar situations are visible across much of the UK at the moment where conflicts in ideology are driving movements in power structures to suit different groupings with the ethics of the outcomes being a focus and the ethics of the endeavours to achieve the outcomes frequently being ignored, which quite fits mythical statements like ‘the ends are more important than the means’. Quite a strange ethically paradoxical misconception when you think about it and contrast it against more broadly construed and strictly logical views like those of Kant on this very point.
