By S. Tom de Kok

Artificial intelligence (AI) in healthcare promises to revolutionize diagnostics, treatments, and efficiency, but it is not infallible. What happens when these promises are accompanied by harms that are difficult to define, attribute, or address? AI-trogenic harm, a novel term for the unintentional harm caused by AI in healthcare, could provide a unique framework for identifying, understanding, and addressing these often-elusive risks. It is not currently in use, but it should be.

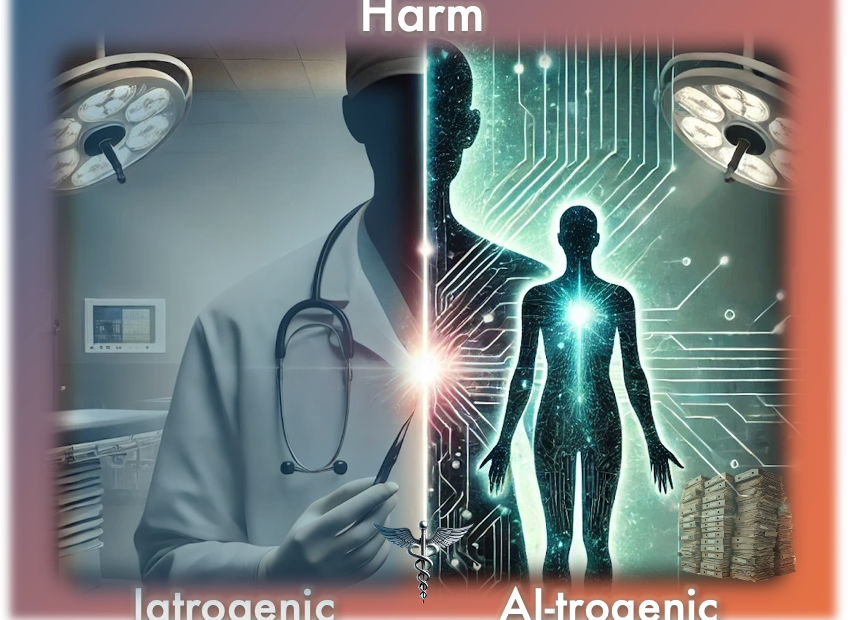

As AI becomes more deeply integrated into healthcare, a foundational question arises: how can we ensure that these innovations do not inadvertently harm the very patients they intend to serve? The challenge is not simply one of exercising caution, but in understanding how to implement it effectively. The nonmaleficence principle—‘first, do no harm’—lies at the heart of medical ethics. When medicine falls short of this standard, we call the result iatrogenic harm: preventable injury or damage that occurs within healthcare’s own walls. Over decades, this concept has shaped protocols, informed patient safety measures, and spurred reflection on the unintended consequences of well-intentioned medical practices, including the overmedicalization of society. Yet, the emergence of AI tests this principle at its core, forcing us to confront novel forms of harm that are not easily attributable to a single actor and cannot always be traced to an obvious decision point.

Traditionally, iatrogenic harm assumes direct human intervention: surgeons slip, clinicians misdiagnose, and device manufacturers sometimes release tools prone to dangerous malfunctions. But AI complicates these assumptions. Operating with degrees of autonomy and opacity, it challenges our ability to pinpoint who—or what—is responsible when an error occurs. The problem extends beyond a faulty diagnosis or a single mishap; it includes the difficulty of attributing harm, understanding AI’s reasoning processes, and safeguarding fundamental patient rights like autonomy and privacy. Unlike iatrogenic harm, which often maps to a discrete clinical act by a directly accountable individual, AI-trogenic harm emerges from intertwined technological, institutional, and cultural factors, dispersing responsibility and challenging traditional frameworks of blame. AI-trogenic harm anticipates the possibility that AI-driven harms may not be isolated incidents, but rather interconnected complexes of issues that redefine our ethical landscape.

The urgency for clearer concepts may sound academic, yet it is anything but abstract. Consider the recent EU AI Act, crafted to safeguard citizens against AI-related risks. It has been criticized for its vague definition of “harm,” a conceptual ambiguity that may undermine its effectiveness.[1][2] In response, the European Commission has launched consultations aimed at defining the terms surrounding harm more clearly by 2026, when the Act comes into full effect.[3] The aim is for regulators, developers, and citizens to share a common ethical language. Philosophers voice similar concerns in medical ethics: if we cannot articulate AI-related harms clearly, we risk confusion, inconsistent accountability, and ultimately an inability to shield patients from harm.[4][5] It is noteworthy that a systematic review of AI in healthcare ethics found the “principle of prevention of harm was the least explored topic.”[6]

Take the case of the Optum Health Care Prediction Algorithm, which aimed to be race-neutral in predicting which patients with complex conditions would benefit from preventive care. Instead, because it relied on biased spending data, it overlooked more than half of the Black patients with complex conditions.[7][8] When the bias was exposed, public debate centered on accountability and racial bias[9], yet this focus strangely ignored the directly affected individuals. Moreover, perpetuating such injustice contributes incrementally to the erosion of trust in healthcare AI, an erosion that may accumulate slowly but ultimately harms the foundation of patient-clinician relationships. Autonomy and consent were also compromised, as patients remained largely unaware of how their data was used or how conclusions were drawn. In this multifaceted environment, where harm occurs at individual, systemic, and even conceptual[10] levels, AI-trogenic harm can serve as a crucial overarching term that acknowledges these interdependencies, rather than treating each instance as a single type of harm or an isolated error.
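To make the mechanism concrete, here is a minimal Python sketch of how a spending-based proxy can go wrong. It is not Optum’s model: the two groups, the per-condition spending figures, and the function names are all hypothetical, chosen only to illustrate the pattern reported by Obermeyer et al.[8], in which equally sick patients generate different costs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    group: str       # hypothetical group label: "A" or "B"
    conditions: int  # active chronic conditions, standing in for true need
    spending: int    # past annual spending in dollars, the proxy label


def make_patients():
    """Build a toy cohort in which both groups are equally sick."""
    patients = []
    for conditions in range(1, 11):
        # Assumption: group B generates less spending per condition,
        # for example because of unequal access to care.
        patients.append(Patient("A", conditions, 1000 * conditions))
        patients.append(Patient("B", conditions, 600 * conditions))
    return patients


def select_top(patients, score, k):
    """Enroll the k patients with the highest score."""
    return sorted(patients, key=score, reverse=True)[:k]


def share_of_group(selected, group):
    """Fraction of the selected patients who belong to the given group."""
    return sum(p.group == group for p in selected) / len(selected)


patients = make_patients()
by_need = select_top(patients, lambda p: p.conditions, k=6)
by_spending = select_top(patients, lambda p: p.spending, k=6)

print("Group B share when ranking by need:", share_of_group(by_need, "B"))
print("Group B share when ranking by spending:", share_of_group(by_spending, "B"))
```

In this toy cohort, half of the sickest patients belong to group B, yet ranking by spending enrolls mostly group A, even though group membership is never an input to the ranking. The harm enters through the choice of what to predict, not through any single line of faulty code.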

1. Criticism: The Charge of Unwarranted Caution

Recognizing such layered harms could raise a broader concern: would a concept like AI-trogenic harm chill innovation unnecessarily, or could stepping back to reflect instead enable more responsible innovation?

The normative debate about how to regulate AI has a wide range of positions. On one side are advocates of the Precautionary Principle, urging vigilance and stricter regulation when the outcomes of novel technology remain uncertain. On the other stand techno-exceptionalists, who argue that AI’s transformative potential justifies minimal intervention, trusting that benefits will outweigh the risks. Both camps agree that errors occur but disagree on how to respond. Regulations like Europe’s GDPR or the U.S. HIPAA protect privacy; however, other areas—such as resource allocation injustice or algorithmic biases—remain less legislatively protected. Should we impose more stringent rules, or let innovation proceed freely?

Here, introducing AI-trogenic harm does not force a binary choice. While it makes ignoring interconnected risks harder, it does so by clarifying what those risks are, not by mandating a particular policy outcome. With a precise term that highlights the complex, layered nature of AI-related injuries, we can engage in more informed discussions about accountability, oversight, and acceptable levels of risk. Whether one leans toward precaution or permissiveness, shared conceptual ground ensures that disagreements turn on substantive points rather than fuzzy definitions.

A techno-exceptionalist might initially shrug off such harms as mere teething problems on a path to greater efficiency or safety. But with AI-trogenic harm explicitly identified, it becomes evident that these are not random defects easily patched; they can be entrenched patterns reflecting cultural biases, institutional priorities, and opaque design decisions. Recognizing this pushes the techno-exceptionalist to justify why these systemic harms should be tolerated or to propose mechanisms that let innovation flourish without entrenched injustice. On the other hand, a pro-precaution voice, using the concept of AI-trogenic harm, can move beyond generic caution to advocate for specific safeguards—like mandatory bias audits, transparent model documentation, or stakeholder consultations—that address the structures producing harm. At the same time, acknowledging AI-trogenic harm also encourages techno-exceptionalists to develop creative solutions that preserve innovation while mitigating harm—balancing the urgency of ethical concerns with the drive to deliver transformative medical benefits.

Still, from a utilitarian perspective, one might argue that harms like Optum’s are regrettable but inevitable costs of progress. While initial responses from Optum emphasized future model improvements rather than acknowledging the immediate harm, one must ask why these socioeconomic factors were not integrated from the outset.[11] Under this view, attempts to prevent such issues upfront could slow the rollout of potentially life-saving technologies, perhaps causing greater harm in aggregate. AI-trogenic harm, however, does not deny the reality of iterative improvement; it simply ensures we understand what we are trading off when we rely on trial-and-error. It highlights that these ‘growing pains’ are not neutral side-effects but ethically significant patterns of harm that deserve scrutiny. By naming them, we encourage developers and policymakers to anticipate and minimize these harms proactively, rather than merely tolerating them as the price of progress.

2. Criticism: Aren’t Existing Concepts Enough?

Others might contend that we already possess a detailed ethical toolbox. Philosopher Brent Mittelstadt and others, for example, outline categories of ethical concern and their roots, ranging from inconclusive or inscrutable evidence to misguided data, unfair outcomes, transformative effects, and traceability problems.[12] Other comprehensive schemas have been used to organize the unique risks that AI poses as well. While these distinctions identify specific trouble spots, they also risk implying that each harm stands alone, obscuring the systemic interplay of factors that produce complex harm ecosystems. In reality, this interplay is intricate, reflecting AI’s systemic nature. That idea could benefit from deeper philosophical defense, a task I aim to undertake in my master’s thesis, where I will explore the interconnected character of AI-induced harms in greater detail.

In the Optum case, bias, lack of transparency, and compromised autonomy do not merely add up to a list of separate harms. Instead, their interaction forms a ‘harm complex’: an emergent phenomenon in which intertwined issues create a new ethical terrain. Each harm is important on its own, but becomes more troubling when coupled with the seeming lack of accountability. Patients aren’t just harmed by bias or by opacity alone; they experience a combined effect that alters trust, skews care, and reshapes their relationship with the healthcare system. This synergy of harms resists simple fixes: improving transparency without addressing bias might not restore trust, and eliminating one source of unfairness may still leave underlying cultural or institutional factors untouched. AI-trogenic harm is about recognizing these emergent harm landscapes. It acknowledges that we cannot treat AI’s ethical challenges as isolated glitches in need of discrete patches; we must approach them as evolving ecosystems of interdependent risks requiring integrated, systemic responses.

Another part of the ethical toolbox consists of prevailing terms like “AI-related healthcare risk” or “AI medical error” that frame problems as discrete, technical glitches. This framing implies that fixing a snippet of code or adjusting a data pipeline might suffice. But AI-induced harms often manifest through cascading effects: for example, biases erode trust, eroded trust reduces patient engagement, diminished engagement leads to poorer data, and poorer data can worsen biases. Similar compounding has been highlighted among privacy, clinician autonomy, inexplicability, and public accountability, where one weakness magnifies another’s impact.[13][14] Of course, not all harms form neat feedback loops, but highlighting such scenarios underscores how partial fixes can fail if we overlook broader systemic dynamics. Such conceptual refinement is especially crucial in healthcare, where “first, do no harm” is a foundational moral stance. Failing to acknowledge the complexity of AI-induced harms, thereby allowing networks of harm to remain hidden behind technical opacity, runs counter to that principle.

3. Criticism: Why Not Just Use Iatrogenesis?

Even if one concedes the need for conceptual innovation, another critique might arise: what does AI-trogenic harm add that iatrogenic harm does not already cover? Iatrogenesis has long guided our understanding of unintended harm in medicine, usually arising from a clear human action such as a surgical error or a prescribing mistake. The fundamental justification for not reusing iatrogenic harm is that it often maps to a discrete clinical act by a known party, whereas AI-trogenic harm emerges from complex, evolving patterns in data, code, and institutional practice, dispersing responsibility and defying the simple attribution that iatrogenic frameworks rely upon.

Yet the value of a new term can seem elusive until one considers other examples. These belong to the field of conceptual ethics, the reflective study of our moral and political vocabularies, which proceeds in two steps: to “first describe deficiencies and then develop ameliorative strategies.”[15]

Consider the introduction of “sexual harassment” as a term in the 1970s. It not only named a wrong but also transformed inarticulate grievances into recognized injustices, prompting meaningful reforms.[16][17] Similarly, the concept of a carbon footprint influenced environmental discourse by encouraging consumer awareness of individual impacts. However, it also revealed how language can be co-opted for strategic ends: it was crafted by a PR firm for BP to deflect blame from large polluters.[18] More recent technological innovation has also seen the engineering of concepts to better define harms. Before “cyberbullying” was coined, online harassment lacked a specific category, leaving victims vulnerable. “Revenge porn” and “fake news” likewise pinpointed harms that were previously poorly understood, spurring targeted legal and social remedies.

AI-trogenic harm follows this logic, highlighting a ‘third’ responsibility zone—neither purely technical nor solely human. Unlike iatrogenic harm, which generally assumes a directly accountable healthcare provider, AI-trogenic harm encompasses emergent patterns of systemic bias, opaque design, and cultural assumptions embedded in technology. Traditional frameworks falter here: who is liable when an algorithm learns a harmful pattern from historical data? How do we assign accountability when harms stem from a confluence of data preprocessing, model architecture, and institutional incentives?

By offering a term that captures the distinct ethical profile of AI-related harm, we acknowledge that neither classic iatrogenic models nor standard “technical error” labels suffice. AI-trogenic harm opens a conceptual space where we can debate new forms of responsibility, novel regulatory approaches, and the moral significance of algorithmic agency, or pseudo-agency.

Conclusion: The Distinct Ethical Terrain of AI in Healthcare

Merely introducing a term like AI-trogenic harm does not, by itself, magically resolve these ethical challenges posed by AI. Overstating the power of a term would be as unwise as underestimating its conceptual traction. Words alone cannot enforce policies, correct biased algorithms, or restore lost trust. Yet, as conceptual ethics shows, the right terms shape the discourse that determines how we address these challenges.

Rather than simply calling for more oversight, AI-trogenic harm encourages us to reimagine our ethical infrastructure: Should we require routine bias screening of algorithms before deployment? Must we establish community panels to oversee data use? Preventing a case like Optum’s would require structural interventions like these and, ultimately, a framework that holds developers and institutions accountable in novel ways for long-term patterns of harm. Without a name for the complexity of this AI-induced harm, it risks remaining invisible, misdiagnosed, misunderstood, or, worst of all, being perceived as inevitable.

Just as “sexual harassment” or “cyberbullying” once lacked precise articulation and are now better understood and regulated, so too can AI-trogenic harm become a recognized category. Iatrogenic harm is a common term in medical schools. Maybe we need to be teaching both. This recognition is meta-ethical rather than strictly normative—by identifying conceptual gaps and proposing new terms, we enable future debates and policies to refine and potentially redefine the meaning of AI-trogenic harm over time. The definitions may shift as our moral understanding and technological contexts evolve, much like how iatrogenic harm’s scope has been debated and expanded. By accepting this fluidity, we acknowledge that conceptual ethics does not fix meanings permanently but invites ongoing dialogue and adaptation.

Recognizing AI-trogenic harm as a distinct category influences how we think. While concepts do not write laws, they can influence the depth and precision of debates that shape legislation. While they do not debug code, they can guide developers to adopt ethically sound design principles. They cannot guarantee trust, but they clarify where trust fractures and suggest avenues for its restoration. Ultimately, AI-trogenic harm makes clear that the integration of AI in healthcare entails a profound shift in how care is delivered, how decisions are made, and consequently how trust might be earned, maintained, or lost.

As I plan to explore these issues further in my thesis, I welcome commentary on overlooked perspectives, additional criticisms, and any insights that might deepen our collective understanding of this emerging ethical landscape.

[1] Organisation for Economic Co-operation and Development (OECD). (2024). Vague concepts in the EU AI Act will not protect citizens from AI manipulation. OECD AI Policy Observatory.

[2] European Parliamentary Research Service. (2021). Artificial intelligence act: EU legislative framework for AI. European Parliament.

[3] European Commission. (2024, November 13). Commission launches consultation on AI Act prohibitions and AI system definition. European Commission – Digital Strategy.

[4] Tang, Lu, Jinxu Li, and Sophia Fantus. “Medical Artificial Intelligence Ethics: A Systematic Review of Empirical Studies.” Digital Health 9 (2023).

[5] Solanki, Pravik, John Grundy, and Waqar Hussain. “Operationalising Ethics in Artificial Intelligence for Healthcare: A Framework for AI Developers.” AI and Ethics 3.1 (2023): 223–240.

[6] Karimian, Golnar, Elena Petelos, and Silvia M. A. A. Evers. “The Ethical Issues of the Application of Artificial Intelligence in Healthcare: A Systematic Scoping Review.” AI and Ethics 2.4 (2022): 539–551.

[7] Johnson, Carolyn Y. “Racial Bias in a Medical Algorithm Favors White Patients over Sicker Black Patients.” The Washington Post, 2019.

[8] Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. *Science*, 366(6464), 447–453.

[9] Paul, K. (2019, October 25). Healthcare algorithm used across America has dramatic racial biases. The Guardian.

[10] By conceptual harm I mean to indicate phenomena like the distortion of healthcare values or principles, the erosion of patient autonomy as an ideal, or the shifting moral landscape where responsibilities and accountabilities become unclear. It’s about the frameworks and conceptual tools we use to navigate ethical issues, not just the end harm itself.

[11] Johnson, Carolyn Y. “Racial Bias in a Medical Algorithm Favors White Patients over Sicker Black Patients.” The Washington Post, 2019.

[12] Mittelstadt, Brent et al. “The Ethics of Algorithms: Mapping the Debate.” (2016)

[13] European Parliament. (2020). The ethics of artificial intelligence: Issues and initiatives. European Union. https://www.europarl.europa.eu/

[14] Floridi, Luciano et al. “AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations.” Minds and Machines 28.4 (2018): 689–707.

[15] Cappelen, H. (2020). Conceptual engineering: The master argument. In A. Burgess, H. Cappelen, & D. Plunkett (Eds.), Conceptual engineering and conceptual ethics

[16] Taub, Nadine. “Sexual Shakedown: The Sexual Harassment of Women on the Job (Book Review).” Women’s Rights Law Reporter 1979: 311–316.

[17] Fricker, Miranda. Epistemic Injustice: Power and the Ethics of Knowing. 1st ed. Oxford: Oxford University Press, 2007.

[18] Solnit, R. “Big oil coined ‘carbon footprints’ to blame us for their greed. Keep them on the hook.” The Guardian, 2021.

The adjective “iatrogenic” can be a little confusing because its definitions vary. Iatrogenic means “caused by a physician or health care worker”. But the word does not automatically mean that the health care worker is liable for the harm or provided the care wrongly. For example, when blood is drawn, the needle may cause a large bruise. That bruise was caused “iatrogenically”, but it is a natural risk of that kind of health care. There was no malpractice, yet the bruise developed anyway. The same goes for hair loss during chemotherapy: it is also caused “iatrogenically”. The same principles are valid for AI. If it was used correctly but the care failed despite this, the health care provider is not liable. But if it was used in the wrong way (e.g. the wrong technique was used, the care was not indicated, etc.), there will be liability for the harm caused.

Thank you for clarifying that iatrogenic harm does not always imply liability. Undoubtedly, AI-trogenic harms will also fall into liable and non-liable categories—but figuring out which is which isn’t so simple in AI-infused healthcare, where “responsibility gaps” abound. As you noted, some harms—like a bruise from a blood draw or hair loss from chemotherapy—are considered inherent risks rather than mistakes. The same principle holds for AI, where unintended harm can arise even when the system is used correctly and no negligence is involved. This nuance underlines why AI-trogenic harm is conceptually significant—or maybe even fascinating.

Ivan Illich stretched the concept of iatrogenesis to cover systemic issues—like “iatrogenic poverty” and medical overreach. AI could unleash equally diffuse harms; as it becomes more enmeshed in healthcare, obvious errors may indeed decline, yet subtler systemic risks could proliferate.

Consider an AI tool that flags a symptom incorrectly, prompting an unnecessary injection and leaving the patient with nothing but a sore bruise. Should we call that negligence? And if so, whose? The developer’s for not training the algorithm properly, or the clinician’s for blindly relying on it? Or is this just one of those “inherent risks,” akin to standard bruising from a blood draw? Even if it’s not liability, as you note is possible, there could be moral responsibility—either blameworthy for failing to foresee or prospectively accountable for preventing such mishaps from becoming routine.

Brushing off these “minor” harms risks normalizing preventable discomfort and ignoring real opportunities to improve AI design and clinical oversight. Should we consider reparations or a formal process for improvement, rather than waving off bruising as statistical inevitability? And what if historically marginalized groups disproportionately suffer these “non-negligent” harms? We already grapple with systemic harm in traditional healthcare. Letting AI magnify such harm seems indefensible, especially when healthcare AI is in its early stages. This is precisely the moment to make sure “responsibility-laundering” does not take hold. Like iatrogenic harm, AI-trogenic harm is bound to encompass both legally actionable mistakes and unavoidable side effects.

Given your point that the iatrogenic label doesn’t automatically mean liability, do you think AI-trogenic harm is conceptually unnecessary because iatrogenic harm suffices, or do opaque decision processes, accountability blind spots, and the “problem of many hands” demand separate conceptual clarity? Even if you consider it premature now, how might shifting technological capabilities, regulatory landscapes, and philosophical consensus change the calculus in the future? I’d be interested in your perspective.

Thanks for your answer.

Let us consider these questions from the perspective of recent legal provisions.

In my country, the Civil Code, which also applied to medical malpractice cases, stated that if any harm was caused by “the characteristics” (this word is important) of any instrument or medical device used in health care, the provider (hospital, physician) was liable for that harm. For example, during an endoscopy the endoscope injures the patient’s internal organs (typically a perforation of the gullet).

According to the courts, this article of the Civil Code applied to such a case, and the health care provider was liable even though the physician did not make any mistake. In other words, the mere fact that the medical device was used established the provider’s liability for the harm. This broad interpretation favouring the patient’s rights was probably not originally intended by the legislator, but the case law developed this way.

Yet this article of the Civil Code was repealed in 2014. The current Civil Code states that the health care provider is liable in only two situations. First, if there is malpractice (i.e. incorrect performance, improper technique, performance violating the rules of medical science, care that was not indicated, etc.). Second, if the medical device used is defective (so it is not the characteristics of the device but only its defectiveness that establishes the provider’s liability).

So if we consider AI to be a medical device under the provisions mentioned above, the health care provider should be liable only if the AI was used incorrectly or the AI device itself was defective.

However, according to the AI Act, which is currently being prepared by the EU and should take effect by 2026, there is a presumption of the health care provider’s culpability if an AI device is used. That is, the provider’s liability is presumed automatically unless the provider proves that the cause of the harm lies entirely outside the AI device, or that the harm was caused by other circumstances that the provider was not able to influence. This rather resembles the above-mentioned article of the Civil Code, which formerly based the provider’s liability solely on the fact that the device was used.

So I think this is the road ahead: the provider is liable for harm caused by AI unless the provider proves that the harm was caused by factors other than the AI. The liable health care provider will subsequently be allowed to claim compensation from the producer of the AI device.

Interesting take on applying iatrogenic harm to medical AI contexts. Regarding the question of whether we should impose strict rules or let innovation take its course, perhaps we should not think of regulation as coming at the expense of innovation. Instead, we could see regulation, especially in high-risk sectors such as healthcare, as an enabler of innovation and as necessary for safe and effective medical AI adoption.

Early in this blog you mention nonmaleficence. I read this as saying that clinicians have a moral obligation to prevent harm to their patients (and maybe even non-patients). As the moniker suggests, moral obligations are things that are both good and required to do. In the case of iatrogenic harm, it seems obvious that clinicians who did not take steps to limit harms to patients by colleagues would fail to meet their moral obligations. But as you make clear in “Criticism 1”, it is not always clear what AI-trogenic harms are, how severe and pervasive they are, and, if these devices eventually deliver superior care, whether AI-trogenic harm will be worth it in the long run. I’m wondering if the concept of moral obligation can provide some insight here. What should we say about a clinician who witnesses, or even suspects, that some AI-trogenic harm is taking place and does nothing? If we don’t think clinicians who sit on the sidelines are blameworthy, maybe the AI-trogenic harms really aren’t that severe. Conversely, maybe they are blameworthy. If so, how much blame should be attributed to them, and what steps need to be taken to account for their shortcomings?

Interesting concept. Let me know if you introduce this in a formal publication. I’d like to read more about it, and maybe even mention it in my own work.
