Guest Post: High Risk, Low Reward: A Challenge to the Astronomical Value of Existential Risk Mitigation

Written by David Thorstad, Global Priorities Institute, Junior Research Fellow, Kellogg College

This post is based on my paper “High risk, low reward: A challenge to the astronomical value of existential risk mitigation,” forthcoming in Philosophy and Public Affairs. The full paper is available here and I have also written a blog series about this paper here.

Derek Parfit (1984) asks us to compare two scenarios. In the first, a war kills 99% of all living humans. This would be a great catastrophe – far beyond anything humanity has ever experienced. But human civilization could, and likely would, be rebuilt.

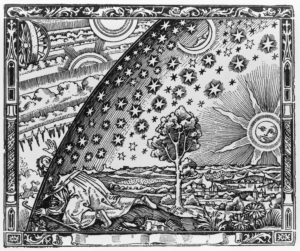

In the second scenario, a war kills 100% of all living humans. This, Parfit urges, would be a far greater catastrophe, for in this scenario the entire human civilization would cease to exist. The world would perhaps never again know science, art, mathematics or philosophy. Our projects would be forever incomplete, and our cities ground to dust. Humanity would never settle the stars. The untold multitudes of descendants we could have left behind would instead never be born.