How Does Social Media Pose a Threat to Autonomy?

Undergraduate Finalist paper in the 2025 National Uehiro Oxford Essay Prize in Practical Ethics. By Rahul Lakhanpaul, University of Edinburgh.

Undergraduate Finalist paper in the 2025 National Uehiro Oxford Essay Prize in Practical Ethics. By Rahul Lakhanpaul, University of Edinburgh.

Written by Muriel Leuenberger

A modified version of this post is forthcoming in Think edited by Stephen Law.

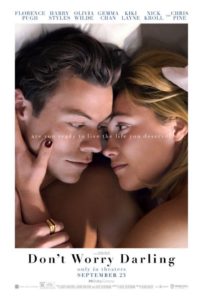

Spoiler warning: if you want to watch the movie Don’t Worry Darling, I advise you to not read this article beforehand (but definitely read it afterwards).

One of the most common reoccurring philosophical thought experiments in movies must be the simulation theory. The Matrix, The Truman Show, and Inception are only three of countless movies following the trope of “What if reality is a simulation?”. The most recent addition is Don’t Worry Darling by Olivia Wilde. In this movie, the main character Alice discovers that her idyllic 1950s-style housewife life in the company town of Victory, California, is a simulation. Some of the inhabitants of Victory (most men) are aware of this, such as her husband Jack who forced her into the simulation. Others (most women) share Alice’s unawareness. In the course of the movie, Alice’s memories of her real life return, and she manages to escape the simulation. This blog post is part of a series of articles in which Hazem Zohny, Mette Høeg, and I explore ethical issues connected to the simulation theory through the example of Don’t Worry Darling.

One question we may ask is whether living in a simulation, with a simulated and potentially altered body and mind, would entail giving up your true self or if you could come closer to it by freeing yourself from the constraints of reality. What does it mean to be true to yourself in a simulated world? Can you be real in a fake world with a fake body and fake memories? And would there be any value in trying to be authentic in a simulation?

by Anders Sandberg – Future of Humanity Institute, University of Oxford

by Anders Sandberg – Future of Humanity Institute, University of Oxford

Is there a future for humans in art? Over the last few weeks the question has been loudly debated online, as machine learning did a surprise charge into making pictures. One image won a state art fair. But artists complain that the AI art is actually a rehash of their art, a form of automated plagiarism that threatens their livelihood.

How do we ethically navigate the turbulent waters of human and machine creativity, business demands, and rapid technological change? Is it even possible?

Read More »Reflective Equilibrium in a Turbulent Lake: AI Generated Art and The Future of Artists

Written by: Anantharaman Muralidharan, G Owen Schaefer, Julian Savulescu

Cross-posted with the Journal of Medical Ethics blog

Consider the following kind of medical AI. It consists of 2 parts. The first part consists of a core deep machine learning algorithm. These blackbox algorithms may be more accurate than human judgment or interpretable algorithms, but are notoriously opaque in terms of telling us on what basis the decision was made. The second part consists of an algorithm that generates a post-hoc medical justification for the core algorithm. Algorithms like this are already available for visual classification. When the primary algorithm identifies a given bird as a Western Grebe, the secondary algorithm provides a justification for this decision: “because the bird has a long white neck, pointy yellow beak and red eyes”. The justification goes beyond just a description of the provided image or a definition of the bird in question, and is able to provide a justification that links the information provided in the image to the features that distinguish the bird. The justification is also sufficiently fine grained as to account for why the bird in the picture is not a similar bird like the Laysan Albatross. It is not hard to imagine that such an algorithm would soon be available for medical decisions if not already so. Let us call this type of AI “justifying AI” to distinguish it from algorithms which try, to some degree or other, to wear their inner workings on their sleeves.

Possibly, it might turn out that the medical justification given by the justifying AI sounds like pure nonsense. Rich Caruana et al present a case whereby asthmatics were deemed less at risk of dying by pneumonia. As a result, it prescribed less aggressive treatments for asthmatics who contracted pneumonia. The key mistake the primary algorithm made was that it failed to account for the fact that asthmatics who contracted pneumonia had better outcomes only because they tended to receive more aggressive treatment in the first place. Even though the algorithm was more accurate on average, it was systematically mistaken about one subgroup. When incidents like these occur, one option here is to disregard the primary AI’s recommendation. The rationale here is that we could hope to do better than by relying on the blackbox alone by intervening in cases where the blackbox gives an implausible recommendation/prediction. The aim of having justifying AI is to make it easier to identify when the primary AI is misfiring. After all, we can expect trained physicians to recognise a good medical justification when they see one and likewise recognise bad justifications. The thought here is that the secondary algorithm generating a bad justification is good evidence that the primary AI has misfired.

The worry here is that our existing medical knowledge is notoriously incomplete in places. It is to be expected that there will be cases where the optimal decision vis a vis patient welfare does not have a plausible medical justification at least based on our current medical knowledge. For instance, Lithium is used as a mood stabilizer but the reason why this works is poorly understood. This means that ignoring the blackbox whenever a plausible justification in terms of our current medical knowledge is unavailable will tend to lead to less optimal decisions. Below are three observations that we might make about this type of justifying AI.

After healthcare and some other essential workers, it might seem the most obvious candidates for a Covid-19 vaccine (if we have one) are the elderly and other groups that are more vulnerable to the virus. But Alberto Giubilini argues that prioritising children may be a better option as this could maximise the benefits of indirect… Read More »Video Series: Covid-19 Who Should Be Vaccinated First?

Are contact tracing apps safe? Dr Carissa Véliz (Oxford), author of ‘Privacy is Power’, explains why we should think twice about using such apps. They pose a serious risk to our privacy, and this matters, even if you think you have nothing to hide!

Written by Stephen Rainey

It is often claimed, especially in heated Twitter debates, that one or other participant is entitled to their opinion. Sometimes, if someone encounters a challenge to their picture of the world, they will retort that they are entitled to their opinion. Or, maybe in an attempt to avoid confrontation, disagreement is sometimes brushed over by stating that whatever else may be going on, everyone is entitled to their opinion. This use of the phrase is highlighted in a recent piece in The Conversation. There, Patrick Stokes writes,

The problem with “I’m entitled to my opinion” is that, all too often, it’s used to shelter beliefs that should have been abandoned. It becomes shorthand for “I can say or think whatever I like” – and by extension, continuing to argue is somehow disrespectful.

I think this is right, and a problem well identified. Nevertheless, it’s not like no one, ever, is entitled to an opinion. So when are you, am I, are we, entitled to our opinion? What does it take to be entitled to an opinion?

Written by Stephen Rainey, and Jason Walsh

Rhetoric about free speech as under attack is an enduring point of discussion across the media. It appears on the political agenda, in various degrees of concreteness and abstraction. By some definitions, free speech amounts to an unrestrained liberty to say whatever one pleases. On others, it’s carefully framed to exclude types of speech centrally intended to cause harm.

At the same time, more than ever the physical environment is a focus of both public and political attention. Following the BBC’s ‘Blue Planet Two’ documentary series, for instance, a huge impetus gathered around the risk of micro-plastics to our water supply, and, indeed, how plastics in general damage the environment. As with many such issues people have been happy to act. Following, belatedly, Ireland’s example, plastic bag use has plummeted in the UK, helped along by the introduction of a tax.

There are always those few who just don’t care but, when it comes to our shared natural spaces, we’re generally pretty good at reacting. Be it taxing plastic bags, switching to paper straws, or supporting pedestrianisation of polluted areas, there is the chance for open conversations about the spaces we must share. Environmental awareness and anti-pollution attitudes are as close to shared politics as we might get, at least in terms of what’s at stake. Can the same be said for the informational environment that we share?Read More »Listen Carefully

Written by Rebecca Brown

Last month, one of the largest music streaming services in the world, Spotify, announced a new ‘hate content and hateful conduct’ policy. In it, they state that “We believe in openness, diversity, tolerance and respect, and we want to promote those values through music and the creative arts.” They condemn hate content that “expressly and principally promotes, advocates, or incites hatred or violence against a group or individual based on characteristics, including, race, religion, gender identity, sex, ethnicity, nationality, sexual orientation, veteran status, or disability.” Content that is found to fulfil these criteria may be removed from the service, or may cease to be promoted, for example, through playlists and advertisements. Spotify further describe how they will approach “hateful conduct” by artists:

We don’t censor content because of an artist’s or creator’s behavior, but we want our editorial decisions – what we choose to program – to reflect our values. When an artist or creator does something that is especially harmful or hateful (for example, violence against children and sexual violence), it may affect the ways we work with or support that artist or creator.

An immediate consequence of this policy was the removal from featured playlists of R. Kelly and XXXTentacion, two American R&B artists. Whilst the 20 year old XXXTentacion has had moderate success in the US, R. Kelly is one of the biggest R&B artists in the world. As a result, the decision not to playlist R. Kelly attracted significant attention, including accusations of censorship and racism. Subsequently, Spotify backtracked on their decision, rescinding the section of their policy on hateful conduct and announcing regret for the “vague” language of the policy which “left too many elements open to interpretation.” Consequently, XXXTentacion’s music has reappeared on playlists such as Rap Caviar, although R. Kelly has not (yet) been reinstated. The controversy surrounding R. Kelly and Spotify raises questions about the extent to which commercial organisations, such as music streaming services, should make clear moral expressions.

Read More »Music Streaming, Hateful Conduct and Censorship

Hazem Zohny and Julian Savulescu

Cross-posted with the Oxford Martin School

Developing AI that does not eventually take over humanity or turn the world into a dystopian nightmare is a challenge. It also has an interesting effect on philosophy, and in particular ethics: suddenly, a great deal of the millennia-long debates on the good and the bad, the fair and unfair, need to be concluded and programmed into machines. Does the autonomous car in an unavoidable collision swerve to avoid killing five pedestrians at the cost of its passenger’s life? And what exactly counts as unfair discrimination or privacy violation when “Big Data” suggests an individual is, say, a likely criminal?

The recent House of Lords Artificial Intelligence Committee’s report acknowledges the centrality of ethics to AI front and centre. It engages thoughtfully with a wide range of issues: algorithmic bias, the monopolised control of data by large tech companies, the disruptive effects of AI on industries, and its implications for education, healthcare, and weaponry.

Many of these are economic and technical challenges. For instance, the report notes Google’s continued inability to fix its visual identification algorithms, which it emerged three years ago could not distinguish between gorillas and black people. For now, the company simply does not allow users of Google Photos to search for gorillas.

But many of the challenges are also ethical – in fact, central to the report is that while the UK is unlikely to lead globally in the technical development of AI, it can lead the way in putting ethics at the centre of AI’s development and use.

Read More »Ethical AI Kills Too: An Assement of the Lords Report on AI in the UK