Written by Cristina Voinea

This blog post is a prepublication draft of an article forthcoming in THINK.

Large Language Models are all the hype right now. Among the things we can use them for is the creation of digital personas, known as ‘griefbots’, that imitate the way people who have passed away spoke and wrote. This can be achieved by feeding a person’s data, including their written works, blog posts, social media content, photos, videos, and more, into a Large Language Model such as ChatGPT. Unlike deepfakes, griefbots are dynamic digital entities that continuously learn and adapt. They can process new information, respond to questions, offer guidance, and even engage in discussions on current events or personal topics, all while echoing the unique voice and language patterns of the individuals they mimic.

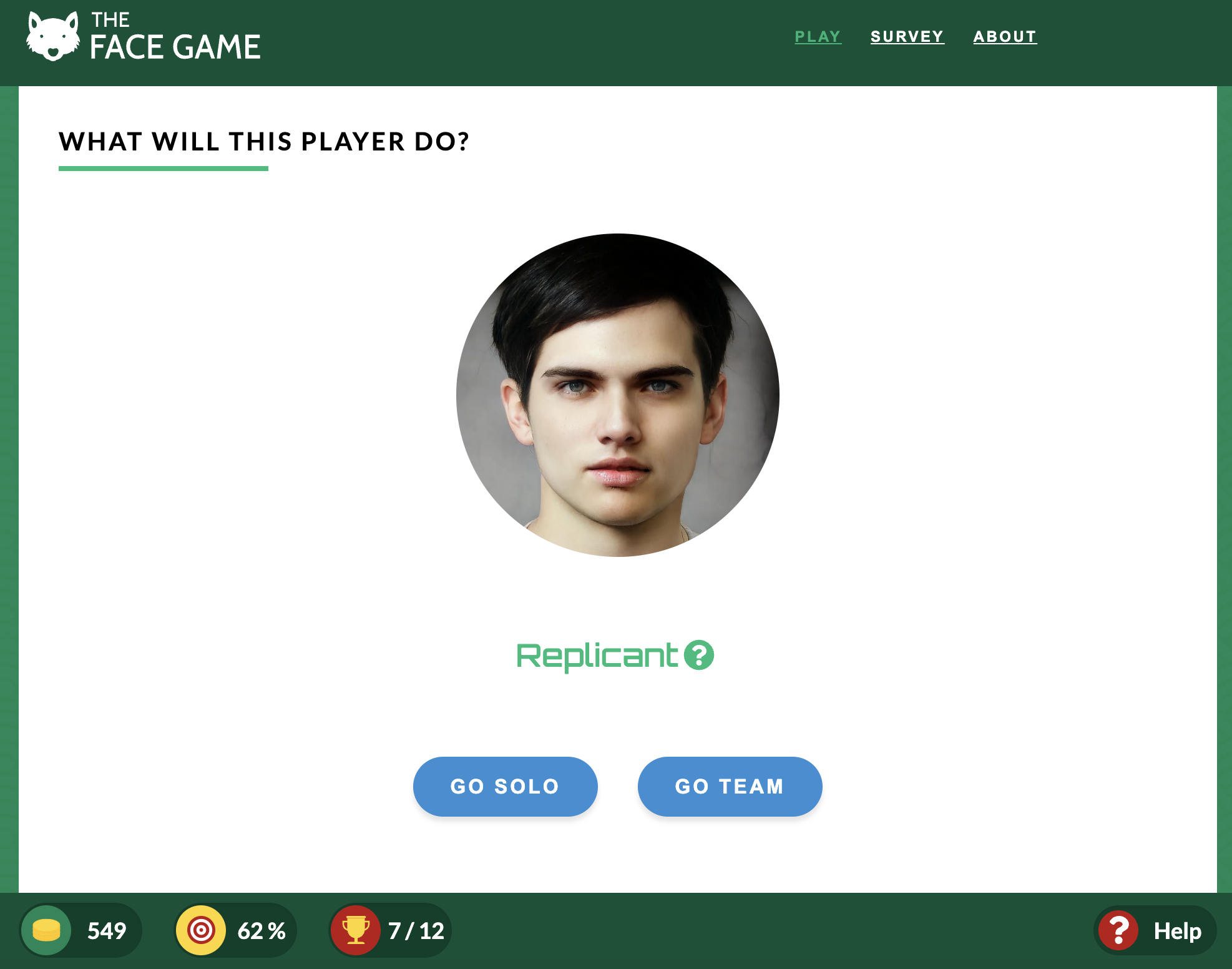

Numerous startups are already anticipating the growing demand for digital personas. Replika is one of the first companies to offer griefbots, although it now focuses on providing more general AI companions, “always there to listen and talk, always on your side”. HereAfter AI offers the opportunity to capture one’s life story by engaging in dialogue with either a chatbot or a human biographer. This material is then combined with other data points to construct a lifelike replica of oneself that can be offered to loved ones “for the holidays, Mother’s Day, Father’s Day, birthdays, retirements, and more.” Another company, You, Only Virtual, is “pioneering advanced digital communications so that we Never Have to Say Goodbye to those we love.”
